Testing Cisco's Next-Gen Mobile Network

In part one of a huge testing program, we put Cisco's IP backbone and IP radio access network (RAN) to the test

August 30, 2010

The future of network communications is mobile – where wireless broadband technologies will be ubiquitously available, scaling to the whole world’s needs, and delivering revenue-generating video and data applications to diverse subscriber groups. (See Cisco: Video to Drive Mobile Data Explosion.)

Well, that is at least the joint goal of operators and vendors. The main question is: How do we get from today’s voice-driven networks – some struggling already under smartphone loads by a minority of their subscriber base – to tomorrow’s heavily broadband-enabled solutions for all? (See AT&T Intros Mobile Data Caps.)

How can such vast bandwidth be delivered to the growing number of cell towers cost effectively? How will this beast scale in the mobile core? Are any operator-provided data applications generating more revenue than they add in cost? And if so, should the network be neutral, or should it favor such applications?

Lifting the cover off mobile testing

Light Reading and the European Advanced Networking Test Center AG (EANTC) thought it was about time to add some objectivity and facts to the discussion. At this point, most network knowledge is contained inside vendor labs and mobile operator network design departments, under non-disclosure agreements. Our goal was to stage an independent, public test of a vendor’s complete mobile infrastructure – including the wired backhaul (from cell sites to the mobile service nodes), the network core (connections among service functions and to the Internet), and the so-called “mobile core” with the large-scale packet and voice gateways. (See Juniper Challenges Cisco in the Mobile Core.)

Cisco Systems Inc. (Nasdaq: CSCO) was the first vendor to approach us with an offer to stage and test all these functions. This came as a surprise, because Cisco has not been well known as a premier mobile infrastructure supplier in the past, but things changed with the Starent acquisition. (See Cisco to Buy Starent for $2.9B and Cisco/Starent Deal Hurts Juniper.)

And Cisco has a history of independent public tests with Light Reading:

Testing Cisco's Media-Centric Data Center

Video Experience & Monetization: A Deep Dive Into Cisco's IP Video Applications

Testing Cisco's IP Video Service Delivery Network

Testing Cisco's IPTV Infrastructure

40-Gig Router Test Results

Indeed, Cisco's commitment to public, independent tests is well known. But it need not be the only vendor we hear from and report on: Participation in this test program is open for any vendor.

About EANTC

EANTC is an independent test lab founded in 1991 and based in Berlin. It conducts vendor-neutral proof-of-concept and acceptance tests for service providers, governments, and large enterprises. Packet-based mobile backhaul and mobile packet gateway (GGSN) testing for vendors and service providers has been a focus area at EANTC since the mid-2000s. Carsten Rossenhoevel of EANTC has co-chaired the MEF's mobile backhaul marketing working group since 2007.

EANTC’s role in this program was to define the test topics in detail, communicate with Cisco during the network design and pre-staging phase, coordinate with test equipment vendors (Spirent and Developing Solutions), conduct the tests at Cisco’s labs in San Jose, Calif., and extensively document the results in this, and a subsequent, report. For this independent test, EANTC was commissioned by, and exclusively reported to, Light Reading. Cisco did not review draft versions of the articles before publication.

Network under test

Cisco provided a complete, realistic mobile operator’s network for GSM/GPRS (2G), UMTS (3G), and Long Term Evolution (LTE) services spanning the mobile core, radio network controllers, and IP backhaul infrastructure.

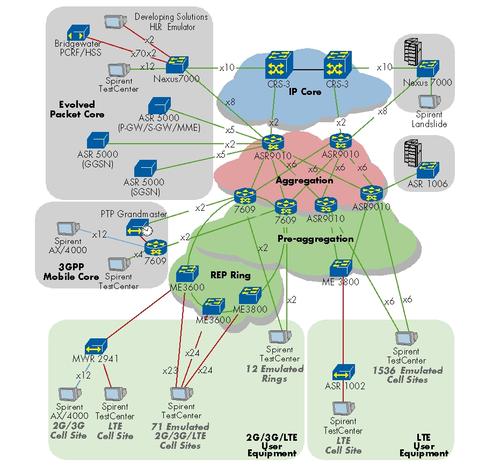

The network was based on an EANTC requirements specification (black-box RFP) that we created as a blueprint for the common denominator of current and future mobile networks in the US, Europe, and Asia/Pacific. Specifically, Cisco selected the following equipment:

ASR5000 mobile gateway – contributed by the recently acquired Starent group

Cisco’s brand-new CRS-3 flagship carrier router including pre-production 100-Gigabit Ethernet equipment

Data center equipment such as the Nexus 7000

The ASR9000 and 7600 aggregation layer routers

Pre-aggregation systems such as the ASR1000, ME3600, and the unreleased ME3800 families

Cell site gateways like the MWR2941.

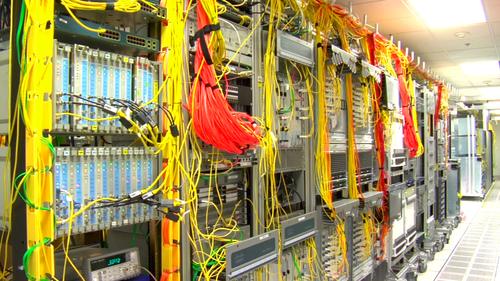

Base stations, subscribers, and additional access rings were emulated. All the components were racked in a Cisco lab, taking 13 full 19" racks. The scale of the network was sufficient to cater to more than 1.5 million active mobile subscribers across more than 4,500 emulated base stations. The tests were planned since December 2009 by a permanent project team of eight people and executed in June and July 2010 with a team of more than 30 experts.

Before we get to the test result highlights, here’s a hyperlinked list of this report's contents:

Page 3: Testing Hardware Used

Page 5: QoS in the IP-RAN

Page 6: Route Processor Redundancy

Page 9: All-IP RAN Scale

Page 10: 100GbE Throughput

Page 11: NAT64 Session Setup Rate & Capacity

Page 12: NAT64 Module Redundancy

Results highlights

We validated an impressive range of Cisco products for most of the pieces of the mobile puzzle. Cisco showed that they truly support all mobile network generations – from GSM (2G) and current generation UMTS (3G) to Long Term Evolution (LTE), the next generation of radio technology. Without exaggerating, our nine-month exercise was by far the largest and most in-depth public, independent, third-party test of mobile infrastructure vendor performance ever.

Cisco showed reassuring scalability and state-of-the-art functionality across all technologies and products. Specifically, the mobile core tests of the ASR5000 showed that the Starent acquisition was a smart move, adding a rock-solid and mature product to Cisco’s offerings. A couple of brand-new products put to the test showed some teething trouble, such as the ME3800 access router and the CRS-3’s 100GigE interfaces – all of which were resolved during the test. These bugs discovered during the EANTC tests were actually a feature: They allowed us to rate how fast Cisco’s engineering and quality assurance teams are, and how much of the engineering knowledge is internal as opposed to pure OEM products or third-party component assembly as seen with other vendors.

Integration of all these components into a consistent and working network design is a major aspect of the challenge: The various business units involved had to align their language and understand mutual protocol support. Some new product lines supported LTE greenfield deployment cases; other products were presented for the 2G/3G/LTE migration case – I guess one cannot have it all at the same time. In surprisingly few cases, our test became a Cisco-to-Cisco interoperability exercise – nothing specific to Cisco, according to our experience, but rather a reality across large industry players.

Now, is LTE deployment ready for prime time? Well, it depends. As far as we can tell from this very extensive lab test, the building blocks for key components in the backhaul and core wireline infrastructure and in the mobile core exist and are scaling right now. But, in the dry language of mathematics, these are necessary, not necessarily sufficient, preconditions. The integration of actual charging and billing functions, provisioning and fault management aspects, etc., remains in the realm of future work for the individual mobile operator.

How could we summarize our findings, now that we are digesting what Cisco served us? As you can tell, the EANTC team is hard to impress – a bit like the prototypical Michelin Guide restaurant critic who leans back after a 12-course feast including oysters and black caviar, raspberry soufflé, and foamed espresso – comparing the experience with the other famed restaurants he has seen. This test really was outstanding, a true industry-first, an innovative and courageous undertaking, and, after all, an exciting experience for everybody involved. We have the pleasure to share the wealth of results and insight into Cisco’s solutions here. Cisco’s products tested are not exactly like black caviar... but we liked the soufflé. Full stop.

– Carsten Rossenhövel is Managing Director of the European Advanced Networking Test Center AG (EANTC), an independent test lab in Berlin. EANTC offers vendor-neutral network test facilities for manufacturers, service providers, and enterprises. Carsten is responsible for the design of test methods and applications. He heads EANTC's manufacturer testing, certification group and interoperability test events. Carsten has over 15 years of experience in data networks and testing. His areas of expertise include Multiprotocol Label Switching (MPLS), Carrier Ethernet, Triple Play, and Mobile Backhaul.

Jambi Ganbar, EANTC, managed the project, executed the IP core and data center tests and co-authored the article.

Jonathan Morin, EANTC, created the test plan, supervised the IP RAN and mobile core tests, co-authored the article, and coordinated the internal documentation.

Page 2: Introduction to Network Backbone & IP Radio Access Network Tests

We broke up the content of this massive test into two pieces: This is the first of two test features, and this one deals with the network infrastructure backbone and IP radio access network (RAN) tests.

The second article – to be published soon – will focus on mobile core and application services testing.

The first section represents the "traditional Cisco" part: to design and build wireline data networks for integrated and sometimes application-aware services. We emulated 2G, 3G, and LTE mobile application data flows only – to keep the scale requirements and the complexity of the tests at a reasonable level. Nevertheless, wireline residential and business applications for DSL and cable networks, such as the ones we focused on in our Medianet test, could be added by increasing the number of nodes and links. (Cisco’s network design is based on Multiprotocol Label Switching (MPLS), similar to the Medianet test.)

Whenever we have asked service providers about the major challenges for packet-based backhaul networks, the responses were consistent: clock synchronization, scale of evolved 3G and LTE data traffic, service level guarantees for voice traffic, and multi-point service types required by LTE. At the same time, all the major markets worldwide see multiple GSM operators, so competitive pricing is a key aspect above all. Depending on whom you ask, there is a certain sense of urgency to different aspects:

The North Americans mostly wrestle with interactive data traffic and improving network coverage – translating into more cell sites and more (Ethernet/IP) bandwidth to each of them. It does not come as a surprise that they trust in the US government’s willingness to deliver clocking through Global Positioning System (GPS) all the time, so network-based clock synchronization is not a major topic for them. (See Telecom Market Spotlight: North America.)

The Europeans have had GSM and UMTS networks for a long time, resulting in a huge amount of legacy E1-attached equipment that needs to be serviced at lower cost in the future. The run for mobile broadband services with laptop dongles has become a major competitive differentiator – the operator with the best backhaul and infrastructure core will win most business subscribers. Subscribers seem to be aware that the downside of network neutrality is a lack of guaranteed service levels, so traffic management of bulk services (such as non-interactive HTTP and peer-to-peer applications) is mostly accepted tacitly as a matter of fact. (See Telecom Market Spotlight: Europe II and Telecom Market Spotlight: Europe.)

European networks have a large number of picocell sites in buildings and underground public transport, so network-based clock synchronization is a key asset to save backhaul cost (if it works).

As diverse as regional markets are in the Middle East and Asia/Pacific, they share a need for very competitive, large-scale deployments. In most areas, pricing is very low, down to $10/month for voice and $40/month for flat rate data services – and the average revenue per user (ARPU) keeps dropping. At the same time, countries such as India, Indonesia, and China are adding tens of millions of new subscribers each quarter. The major needs in this area are all-in-one, low-cost, large-scale networks. It would be good to add some better call quality in some areas (guaranteeing voice quality in the network and cell site availability), as a side-note requirement for the future. LTE is only a concern for the more elaborate markets like Singapore. (See A Guide to India's Telecom Market, Telecom Market Spotlight: Middle East, India Adds 18M Subs in June, SingTel Shows Off LTE, and Telecom Market Spotlight: Asia.)

How did we map all these requirements into a single test setup? It was a straightforward exercise, since we always keep the pricing aside: We just added all the market requirements to the design requests we sent to Cisco. Our network had to be ready for large-scale data services, feature 2G/3G/LTE migration, provide clock (frequency and phase) synchronization at its best, and it had to be resilient in the backbone and the access and show differentiated quality of service.

Once we delivered this challenge to Cisco in January this year, they chewed on it for a while and returned a design and implementation guide to us a month later. Cisco used this guide, now in its 30th version after numerous joint revisions, as the basis of the test network.

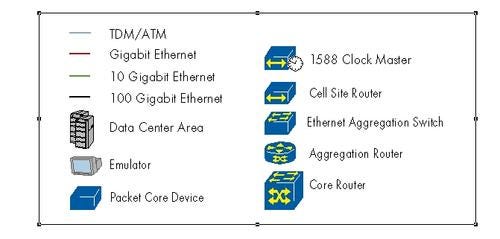

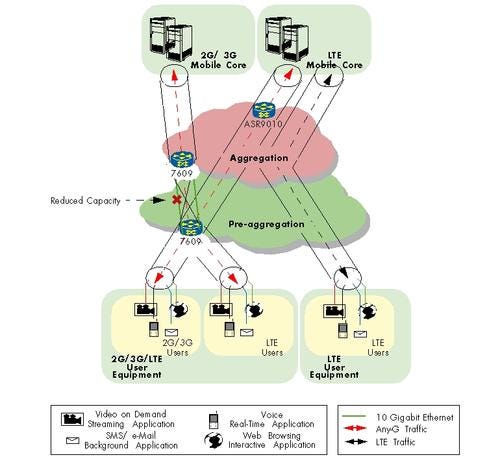

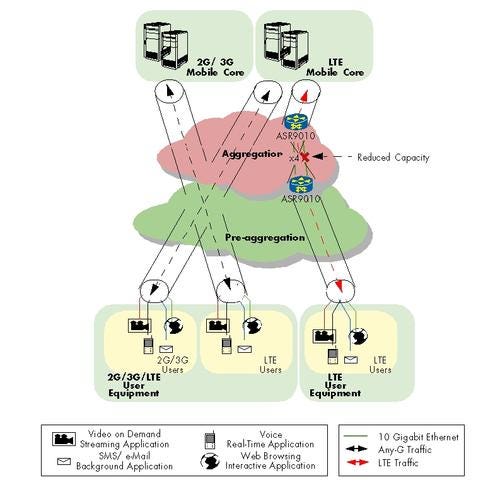

The diagram above is the overall physical topology of all nodes in the network and the directly attached test equipment (more details on the test gear on the next page). The ASR 5000s, taking on a different role depending on whether the network was configured for LTE or 3G (or in the case of a handover demo – both, amongst a pair of devices), are labeled as such in their test-specific diagrams, which can be found in the next article of this series.

From the user side up, it is clear that Cisco is using two distinct models in the same network – one for those looking to deploy a hybrid of 2G/3G/LTE, and another for greenfield LTE deployments. Each has its own parallel access and initially parallel aggregation systems (7609 on one hand, ASR 9010 on the other). However, these converge for LTE traffic headed to the Evolved Packet Core (EPC). Finally, up top one will find the unified IP core, where post-mobile-core traffic of any mobile technology would go out to the Internet. The tables below outline how many emulated cell sites of each type we had, what services were emulated behind each, and how many users (or streams) were created for that emulation. (See Evolved Packet Core for LTE.)

Table 1: 2G/3G/LTE Traffic Table

| Traffic Type | Description | Downstream Bandwidth per Session (Mbit/s) | Sessions per Tower | Bandwidth per Tower (Mbit/s) | Packet Size (Bytes) | DiffServ Class |
| --- | --- | --- | --- | --- | --- | --- |
| 2G (TDM) | Voice and Data | 0.025 | 226 | 5.67 | 280 | Expedited Forwarding |
| 3G (ATM Data) | Voice | 0.020 | 406 | 8.12 | 512 | Best Effort |
| 3G (ATM - Real time) | Data | 0.134 | 95 | 12.77 | 64 | Expedited Forwarding |
| LTE (Real time - Network) | X2 Interface (control plane) | 0.026 | 11 | 0.29 | 1,024 | Expedited Forwarding |
| LTE (Real time - User) | Voice | 0.020 | 40 | 0.80 | 64 | Expedited Forwarding |
| LTE (Streaming) | IP Video, Multi-media, Webcast | 0.342 | 10 | 3.42 | 1,024 | Class 4 |
| LTE (Interactive) | Web browsing, data base retrieval, server access | 0.323 | 8 | 2.58 | 512 | Class 2 |

Number of Any-G Cell Sites: 864

Table 2: All-IP LTE Traffic Profile

| Traffic Type | Description | Downstream Bandwidth per Session (Mbit/s) | Sessions per Tower | Bandwidth per Tower (Mbit/s) | Packet Size (Bytes) | DiffServ Class |
| --- | --- | --- | --- | --- | --- | --- |
| LTE (Real time - Network) | X2 Interface (control plane) | Not seen by transport network | | | 1,024 | Expedited Forwarding |
| LTE (Real time - User) | Voice | 0.020 | 271 | 5.42 | 64 | Expedited Forwarding |
| LTE (Streaming) | IP Video, Multi-media, Webcast | 0.352 | 67 | 23.59 | 1,024 | Class 4 |
| LTE (Interactive) | Web browsing, data base retrieval, server access | 0.356 | 50 | 17.78 | 512 | Class 2 |
| LTE (Background) | E-mail, SMS, peer-to-peer | 0.349 | 70 | 24.43 | 512 | Best Effort |

Number of All-IP Cell Sites: 1536
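To get a feel for the scale these two profiles imply, the per-tower figures can be added up and multiplied by the number of emulated cell sites. The following Python sketch does only that. It assumes that every emulated site carries the full profile at the same time and that the "Bandwidth per Tower" values can simply be summed; both are our simplifications, and the function and variable names are illustrative.

```python
# Downstream load per tower in Mbit/s, copied from the "Bandwidth per Tower"
# columns of Tables 1 and 2. The X2 row of Table 2 has no figure and is omitted.
ANY_G_TOWER = {
    "2G (TDM)": 5.67,
    "3G (ATM Data)": 8.12,
    "3G (ATM - Real time)": 12.77,
    "LTE (Real time - Network)": 0.29,
    "LTE (Real time - User)": 0.80,
    "LTE (Streaming)": 3.42,
    "LTE (Interactive)": 2.58,
}
ALL_IP_TOWER = {
    "LTE (Real time - User)": 5.42,
    "LTE (Streaming)": 23.59,
    "LTE (Interactive)": 17.78,
    "LTE (Background)": 24.43,
}
ANY_G_SITES = 864    # from Table 1
ALL_IP_SITES = 1536  # from Table 2


def tower_load_mbps(profile):
    """Sum the per-class downstream load of one emulated tower (Mbit/s)."""
    return sum(profile.values())


def aggregate_load_gbps(profile, sites):
    """Scale one tower's load to all sites (Gbit/s).

    Assumption: every site carries the full profile simultaneously.
    """
    return tower_load_mbps(profile) * sites / 1000.0


if __name__ == "__main__":
    for name, profile, sites in (
        ("Any-G", ANY_G_TOWER, ANY_G_SITES),
        ("All-IP", ALL_IP_TOWER, ALL_IP_SITES),
    ):
        print(
            f"{name}: {tower_load_mbps(profile):.2f} Mbit/s per tower, "
            f"{aggregate_load_gbps(profile, sites):.1f} Gbit/s across {sites} sites"
        )
```

Under these assumptions the sums come to 33.65 Mbit/s per Any-G tower and 71.22 Mbit/s per All-IP tower, or roughly 29 Gbit/s and 109 Gbit/s of downstream traffic in aggregate. These are derived from the tables, not measurements reported by the test.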

Page 3: Testing Hardware Used

It would have been slightly impractical for EANTC to ship 1 million handsets to Cisco labs for the test – and we admit that our team lacks the thousands of employees to operate them. So we had to take a smarter approach, partnering with Spirent Communications plc in emulating the base stations (whether E1, ATM, or Ethernet-attached), all individual subscriber traffic, and the Internet and voice traffic gateways. Spirent supplied a mind-boggling amount of emulator equipment – basically a shopping list of almost all of its products.

The test program challenged us to find a test vendor that could support the diverse areas we intended to look into. The test partner needed to support Layer 2-3 testing, where packet blasting, marking, tracking, and a high level of scalability were a must. In addition, in order to support our mobile core testing, we needed testers that supported the latest 3rd Generation Partnership Project (3GPP) standards (including Long Term Evolution), were able to generate impairments and follow the ITU-T G.8261 profiles, and could generate stateful Layer 4-7 traffic.

When we started the project in November 2009, we chose Spirent Communications because it was the only test vendor that could cover all parts of the test. Spirent delivered several racks full of testers that included:

Spirent TestCenter – This tester was used for all Layer 2-3 traffic generation, scalability, and resiliency testing.

Spirent XGEM – This impairment generator was used to execute the ITU-T G.8261 synchronization testing as well as to generate unidirectional, realistic impairments mimicking transmission equipment in a service provider’s network.

Spirent Avalanche – The Avalanche was used for all tests that required stateful traffic and protocol emulation such as SIP.

Spirent AX4000 – To emulate ATM and E1 traffic.

Spirent Landslide – As this test was heavily focused on Cisco’s mobility solutions, we used the Landslide to emulate mobile users (user equipment) and SGSNs.

Spirent supported the testing on site with a team of engineers that worked shoulder to shoulder with EANTC and Cisco’s staff. With this in hand, we had all the building blocks to start the fun. Let’s see how Cisco performed...

Page 4: Phase & Frequency Synchronization

Summary: Cisco's MWR2941 showed accurate frequency synchronization up to LTE MBMS requirements using a hybrid solution with IEEE1588 (Precision Time Protocol) and Synchronous Ethernet.

One of the biggest challenges facing carriers planning to use packet-based networks in mobile backhaul is clock synchronization of base stations with their respective controllers. In fact, there are a variety of requirements for time-of-day synchronization, frequency, and phase synchronization, from a variety of industries (financial, electrical, test & measurement, etc.).

For mobile providers, the requirement mostly comes from their need to align the frequencies of the base station air interfaces so that: a) they do not experience wander, losing connections to handsets; and b) a transaction can continue as the user is handed over from one base station to another.

In LTE, specific requirements are added for synchronizing the phase of the base station oscillators, in order (for example) to facilitate Multimedia Broadcast Multicast Service (MBMS) or enable efficient use of radio resources amongst groups of eNodeBs.

Traditionally, synchronization in mobile networks is provided by a synchronous physical interface (Layer 1) at the base station – which came for free with the legacy E1/T1 connections. However, this synchronization capability is not inherent to Ethernet as it is with traditional physical media. Therefore, with the move to Ethernet-based transport, carriers must use other methods to synchronize their base stations unless they are prepared to keep one legacy link to each cell site forever.

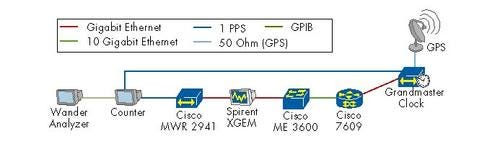

Two such technologies are Synchronous Ethernet (SyncE), which enables hop-by-hop physical layer synchronization, and the Precision Time Protocol (PTP) defined in the IEEE 1588-2008 standard – a control protocol to pass sync information throughout the Ethernet/IP network. PTP does not require that devices be physically connected as SyncE does, and PTP also provides phase synchronization, which SyncE does not. However, SyncE can sometimes achieve a higher sync quality since it is taken from the physical interface at Layer 1, and also because, unlike 1588, network congestion is theoretically not a factor. For these reasons, Cisco has taken advantage of both protocols in what it calls a "hybrid synchronization" solution. Our goal was not to verify protocol conformance, but rather the end result – synchronization quality.
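Why congestion matters to PTP but not to SyncE becomes clearer from the basic arithmetic of a PTP delay request-response exchange. The sketch below is a minimal Python illustration of that textbook calculation, not of Cisco's hybrid implementation. The function name and the sample timestamps are hypothetical.

```python
def ptp_offset_and_delay(t1, t2, t3, t4):
    """Estimate slave clock offset and one-way path delay from four timestamps.

    t1: master sends Sync            (master clock)
    t2: slave receives Sync          (slave clock)
    t3: slave sends Delay_Req        (slave clock)
    t4: master receives Delay_Req    (master clock)

    The estimate assumes the path delay is the same in both directions.
    """
    master_to_slave = t2 - t1
    slave_to_master = t4 - t3
    offset = (master_to_slave - slave_to_master) / 2
    delay = (master_to_slave + slave_to_master) / 2
    return offset, delay


if __name__ == "__main__":
    # Hypothetical values in microseconds: the slave clock runs 5 us ahead.
    # Symmetric path, 100 us each way: the offset is recovered exactly.
    print(ptp_offset_and_delay(0, 105, 200, 295))
    # Congested downstream path, 150 us one way and 100 us back:
    # half of the 50 us asymmetry shows up as an error in the offset.
    print(ptp_offset_and_delay(0, 155, 200, 295))
```

In the second call the true offset is still 5 microseconds, but the estimate is 30, because queuing delay in one direction breaks the symmetry assumption. This is one reason PTP packets are usually prioritized in the network.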

The industry standard for testing synchronization over packet switched networks is outlined in ITU-T recommendation G.8261. The standard defines methods of capturing and replaying network conditions that the synchronization solution should endure. Conditions for several realistic scenarios are defined in different test cases, three of which we chose to evaluate:

Test Case 13: Sudden drastic changes in network conditions are emulated – 6 hours.

Test Case 14: The emulated network slowly ramps up to conditions of heavy loads, and eventually ramps down to a calmer state once again – 24 hours.

Test Case 17: The network experiences failure, and thus a convergence scenario is emulated – 2 hours.

Each test case defines how such scenarios can be created in an artificial test environment with 10 generic Ethernet switches in a sequence, from which the frame delay, frame delay variation, and loss imposed on the traffic are captured. Since this setup takes a lot of hardware and does not generate reproducible results across different switches, we used an impairment device, the Spirent XGEM, to recreate the scenario for our testing purposes.

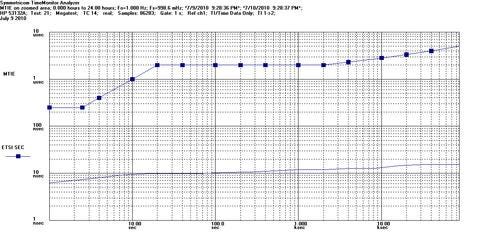

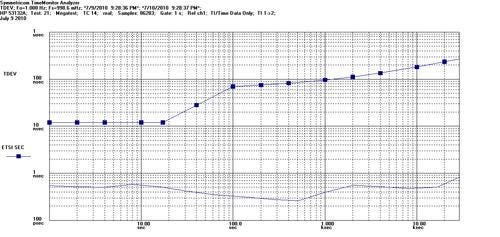

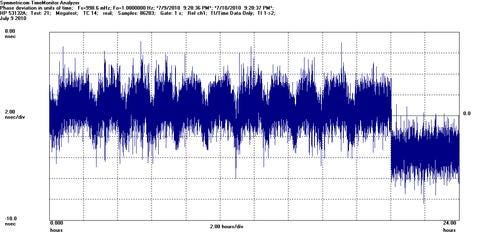

All the while, we measured the frequency and phase of the 1pps (pulse per second) output interface on Cisco’s MWR2941 cell site router, the clock slave, compared with that of the Grandmaster – Symmetricom’s TimeProvider 5000, which was connected to the Cisco 7600 in a regional data center. As normally recommended by most synchronization vendors, PTP packets were prioritized in the network – in this case based on the Priority bits in the VLAN header. Using Symmetricom Inc. (Nasdaq: SYMM)’s TimeWatch software, we then measured the quality in terms of Maximum Time Interval Error (MTIE), Time Deviation (TDEV), and raw phase – industry standards – against the ITU-T G.823 SEC mask.

In each case the relative slave clock MTIE, TDEV, or phase was graphed – the slave’s clock output interface relative to the master’s. For all stress tests, results stayed within the mask as expected. In most of the cases, MTIE did not increase noticeably over time, showing an oscillator optimized for several minutes up to an hour of disconnect from the master clock – an impressive result under load, possible only due to the smart combination of multiple sync techniques.
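For readers unfamiliar with MTIE, the metric is the largest peak-to-peak swing of the slave's time error found in any observation window of a given length. The Python sketch below computes it by brute force from a list of time-error samples. It is a minimal illustration of the definition, not of the TimeWatch software; the sample values are hypothetical, and the G.823 mask limits are not reproduced here.

```python
def mtie(time_error, window):
    """Maximum Time Interval Error for a window of `window` consecutive samples.

    time_error: slave-minus-master time error samples, evenly spaced in time.
    window: number of samples per observation window (at least 2).
    """
    if window < 2 or window > len(time_error):
        raise ValueError("window must be between 2 and the number of samples")
    worst = 0
    # Slide the window over the record and keep the largest peak-to-peak value.
    for start in range(len(time_error) - window + 1):
        chunk = time_error[start:start + window]
        worst = max(worst, max(chunk) - min(chunk))
    return worst


if __name__ == "__main__":
    # Hypothetical time-error samples in nanoseconds.
    samples = [0, 4, 9, 7, 12, 20]
    for n in (2, 3, 6):
        print(f"window of {n} samples: MTIE = {mtie(samples, n)} ns")
```

A clock passes a mask test when the MTIE for every observation window stays below the limit the mask defines for that window length. A curve that flattens out for longer windows, as described above, indicates that the error is not accumulating over time.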

In the case of G.8261 Test Case 14, we did notice that the Cisco MWR 2941 had to recalibrate its oscillator automatically during the test to align with the shifting phase from the master. However, it did so very smoothly and steadily, leaving the Symmetricom software to report a very even 1.0Hz for the phase of the interface, just as it had for the other two test cases. We have provided the phase, MTIE, and TDEV graphs for Test Case 14 here:

Page 5: QoS in the IP-RAN

Summary: Here we demonstrated that the ASR 9010 and 7609 platforms could appropriately classify and prioritize five classes of traffic during link congestion.

In designing this network, Cisco had an easier task than when normally planning live networks: The users were emulated, and thus their network usage was static instead of bursty. The network was appropriately built to accommodate this usage and did not experience congestion. In real-world practice, however, network areas will have both usage peaks and plateaus.

Specifically, base station locations are typically provisioned for peak usage – to handle the maximum load of users – whereas the backhaul network typically is not. The base station requirement comes from the reality of human location. In the case of events such as a sports event or concert, hundreds of users will be concentrated for a few hours.

Now you might say you have attended a large sports event before and never had issues with voice calls. Correct: The issue is primarily related to data traffic, which accompanies the live experience at a growing rate. A few parallel video streams may saturate a single base station. Sure, outside of these hours, the local network resources may not be used much at all. However, if too many calls are lost or there is no connectivity during an event, it would be a pretty negative experience for the user.

But to plan for full load throughout the aggregation and core networks is simply not worth the cost. Some routers can be saved with the cost of a few rare moments of congestion. For these rare moments, though, a crucial and well known feature is incorporated into packet backhaul networks: Quality of Service (QoS).

As a side note, LTE is designed with smart advanced mechanisms to circumvent network congestion during such usage peaks at the application layer by redirecting base station traffic – but the practicality of these mechanisms has not been widely tested yet to our knowledge, and they are not available for 2G and 3G networks anyway.

QoS describes the general ability to differentiate traffic and treat it differently in regard to its drop priority, drop probability, increased or decreased latency, and so on. The goal in this test was to verify that in a congestion scenario, the device under test (DUT) would prioritize traffic accordingly. To prove that such prioritization could work in a realistic scenario, we used the master traffic configuration explained earlier on. In order to create congestion, we removed a single link in the network. We performed the test on two systems: Cisco’s ASR 9010 and 7609.

Table 4: QoS in the IP-RAN: 7609

| Traffic Class | Mobile Generation | Application Type | Application Sample | Direction | Percent Packets Lost from this Class (all streams) | Percentage of Streams from this Class with Loss | Average Percentage Loss Amongst Affected Streams |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Best Effort | 2G (data) - 3G (ATM data) | Background | all data | UE* --> ME | No Loss | No Loss | No Loss |
| Best Effort | 3G - LTE | Background | SMS, email, downloads | ME --> UE | 2.79% | 13.20% | 26.13% |
| Assured Forwarding (cs2) | 3G - LTE | Interactive | Web browsing, data base retrieval, server access | UE --> ME | No Loss | No Loss | No Loss |
| Assured Forwarding (cs2) | 3G - LTE | Interactive | Web browsing, data base retrieval, server access | ME --> UE | No Loss | No Loss | No Loss |
| Expedited Forwarding | 2G (TDM) | Real time-user | all data | UE --> ME | No Loss | No Loss | No Loss |
| Expedited Forwarding | 3G (ATM) - LTE | Real time-user | voice, control | ME --> UE | No Loss | No Loss | No Loss |
| Assured Forwarding (cs4) | 3G - LTE | Streaming | multimedia, video on demand, Webcast applications | ME --> UE | No Loss | No Loss | No Loss |
| Assured Forwarding (cs4) | 3G - LTE | Streaming | multimedia, video on demand, Webcast applications | ME --> UE | No Loss | No Loss | No Loss |
| Expedited Forwarding (cs5) | LTE | Real time-network | X2 Interface | SideA -> SideB | No Loss | No Loss | No Loss |

* User Equipment

In preparation for the test, we found that Cisco’s hash algorithm for load balancing on the 7609 and ASR 9010 works unevenly. This is a generic issue with hash algorithms across the industry – in Cisco’s case, though, some links were loaded at only 29 percent of line rate, while others were heavily used at 71 percent. We allowed Cisco to show us which links were under heavier load, guaranteeing that their removal would induce congestion on the router. In each case, links between similar device types were removed – between the 7609 connected to the aggregation ring and the 7609 connected to the PTP Grandmaster, and between the upstream ASR 9010 and downstream ASR 9010.

Once the link was chosen, it was calculated that in the ASR 9010 test the two lower classes would suffer from congestion, and in the 7609 test only one class (BE, Best Effort) would. This meant that zero packet loss was expected on any of the top three, or four, classes, respectively. The results were pretty straightforward. In the ASR 9010 test, we in fact saw zero packet loss on our top three classes (EF, CS4, CS5) and saw a decent percentage of loss, but not everything, occurring on the lower two priority classes (CS2, BE). In the 7609 test there was enough bandwidth for the top four classes to get through without loss, and only BE traffic observed loss.
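The kind of calculation described here can be sketched with a deliberately simplified model in which a congested link serves classes in strict priority order until its capacity runs out. The Python example below uses that model. The offered loads, the link capacities, and the exact ordering of the classes are hypothetical values chosen for illustration; they are not the figures used in the test, and the routers' actual schedulers are not described in this report.

```python
def strict_priority_loss(offered, capacity):
    """Return the loss fraction per class on a congested link.

    offered: list of (class_name, load_mbps), highest priority first.
    capacity: link capacity in Mbit/s.
    Simplification: each class is fully served before the next one gets
    any bandwidth (strict priority, no weighted sharing).
    """
    remaining = capacity
    loss = {}
    for name, load in offered:
        served = min(load, remaining)
        remaining -= served
        loss[name] = 0.0 if load == 0 else (load - served) / load
    return loss


if __name__ == "__main__":
    # Hypothetical offered loads in Mbit/s, highest priority first.
    offered = [("EF", 200), ("CS5", 50), ("CS4", 300), ("CS2", 250), ("BE", 400)]
    # Mild congestion: only the lowest class loses traffic.
    print(strict_priority_loss(offered, capacity=900))
    # Heavier congestion: the two lowest classes lose traffic.
    print(strict_priority_loss(offered, capacity=700))
```

With the hypothetical numbers above, the first case drops Best Effort traffic only and the second drops traffic in the two lowest classes, the same pattern as the expectations for the 7609 and ASR 9010 tests. A strict model would starve Best Effort completely before touching CS2, whereas the measured ASR 9010 results show partial loss in both, so the real scheduling is evidently more nuanced than this sketch.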

Table 5: Qos in IP-RAN: ASR 9010

| Traffic Class | Mobile Generation | Application Type | Application Sample | Direction | Percent Packets Lost from this Class (all streams) | Percentage of Streams from this Class with Loss | Average Percentage Loss Amongst Affected Streams |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Best Effort | 2G (data) - 3G (ATM data) | Background | all data | Upstream | No Loss | No Loss | No Loss |
| Best Effort | 3G - LTE | Background | SMS, email, downloads | Downstream | 7.59% | 5.02% | 99.15% |
| Assured Forwarding (cs2) | 3G - LTE | Interactive | Web browsing, database retrieval, server access | Upstream | No Loss | No Loss | No Loss |
| Assured Forwarding (cs2) | 3G - LTE | Interactive | Web browsing, database retrieval, server access | Downstream | 0.32% | 8.33% | 2.67% |
| Expedited Forwarding | 2G (TDM) | Real time-user | all data | Upstream | No Loss | No Loss | No Loss |
| Expedited Forwarding | 3G (ATM) - LTE | Real time-user | voice, control | Downstream | No Loss | No Loss | No Loss |
| Assured Forwarding (cs4) | 3G - LTE | Streaming | multimedia, video on demand, Webcast applications | Downstream | No Loss | No Loss | No Loss |
| Assured Forwarding (cs4) | 3G - LTE | Streaming | multimedia, video on demand, Webcast applications | Downstream | No Loss | No Loss | No Loss |
| Expedited Forwarding (cs5) | LTE | Real time-network | X2 Interface | Between eNodeBs | No Loss | No Loss | No Loss |

Page 6: Route Processor Redundancy

Summary: Our tests show there was no BGP session or IP frame loss during component failover tests of the CRS-1 Route Processor Card.

Resiliency mechanisms within a single device are common practice in service provider deployment scenarios. The CRS-1, being such a central element in the Cisco Mobility solution, is exactly the kind of router that should be made as resilient as possible internally, without requiring a second identical router next to it. After all, the CRS-1 connects the mobile service data center with the network and the mobile core – minimizing failure in that router is an important goal.

The CRS-1 included two control plane modules, Route Processors (RPs) in Ciscospeak. Cisco claimed that when the active module experiences a catastrophic event that causes it to fail, the second module will completely take over, maintaining routing instances and causing no user to lose its sessions or any frames. Cisco calls the feature "Non-Stop Routing."

We connected the CRS-1 to 10 tester ports and established iBGP peering sessions on each port. IS-IS internal routing was configured as well. We sent bi-directional native IPv4 traffic between the ports that resembled what we expected traffic to look like when 4,500 cell sites were aggregated into the core of the network – adding up to 95.292 Gbit/s per direction of traffic. With this setup running, we failed the active RP by yanking it out.

We monitored our Border Gateway Protocol (BGP) sessions and the traffic flowing through the Device Under Test (DUT), and confirmed that all BGP neighbors remained up and that there was no frame loss at any time. We repeated the test six times to make sure the results were consistent. Each failure was followed by a recovery to make sure that the solution works in revertive mode, recovering the original state as soon as reasonable.

In all our test runs we recorded no frame loss and no BGP session drops – all to Cisco’s great delight. The two RPs played well with each other and were able to synchronize their states and maintain their BGP sessions even when a card was pulled out. The test showed that the CRS-1, equipped with redundant route processors, is a resilient router able to continue its normal operation even in the face of major component failure.

Page 7: Hardware Upgrade (CRS-1 to CRS-3)

Summary: We performed a live switch fabric upgrade of a CRS-1 router to a CRS-3 under load, enabling 100-Gigabit Ethernet scale. The test resulted in less than 0.1 milliseconds out-of-service time – sometimes even zero packet loss.

The CRS-1 is certainly a large-scale router – not the type of equipment one would like to take out of service for a hardware upgrade. A lot of major backbone links will usually be connected, which cannot accept a service outage beyond half a minute per month (99.999% availability).
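
The half-minute figure follows from simple arithmetic. A minimal sketch, assuming a 30-day month (the function name and the month length are illustrative assumptions):

```python
def allowed_downtime_seconds(availability_percent, period_days=30):
    """Downtime budget for a given availability over a period of days."""
    period_seconds = period_days * 24 * 3600
    return period_seconds * (1 - availability_percent / 100.0)

# "Five nines" over an assumed 30-day month.
print(round(allowed_downtime_seconds(99.999), 2))  # 25.92 seconds
```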

This is why in-service hardware upgrades are more than a nice-to-have in the core router business.

Moving from Cisco’s CRS-1 to the CRS-3 requires a switch fabric hardware upgrade in order to support 100-Gbit/s line interface modules. Before the upgrade, the switch fabric connectors each ran at 40 Gbit/s. The CRS-1’s mid-plane design pays off at this point: The fabric cards can be exchanged while the line interface modules stay in place, and no re-cabling of user ports is necessary.

Cisco provided a CRS-1 4-Slot Single-Shelf System (referred to as CRS-4/S – the product’s part number). Cisco stated that, while the router was forwarding traffic and maintaining BGP sessions with the tester, a Cisco engineer would replace the existing switch fabric cards with the new ones (called FC-140G/S).

Let’s pause here for a moment.

The switch fabric on the CRS-1 is the equivalent to the engine of a car – it receives user data from Module Service Cards (MSCs) and performs the switching necessary to forward the data to the appropriate egress MSC. So, metaphorically speaking, we were going to remove parts of the engine while the car was on the highway and replace it with a much faster engine. We were sceptical, to say the least.

We started the test by sending native IPv4 traffic through the CRS-1 and setting up iBGP sessions on each of the 10 ports connecting the tester with the router. The upgrade process was initiated once traffic was flowing freely and without loss, and all BGP sessions were stable. Each switch fabric card had to be physically removed and replaced with the new FC-140/S fabric. We monitored the router’s command line interface (CLI) while the Cisco engineer replaced the fabrics. The system always recognized that the fabric was being removed and that the new fabric was being inserted. We repeated the whole test sequence three times to make sure that the results were consistent, each time replacing all four switch fabric cards.

Each upgrade test ran for 20 minutes. We could confirm that our BGP sessions never went down. The first test run showed no frame loss as expected. In the following two runs we recorded some minor loss. In the second run we lost 9 frames, and in the third test run we lost 2,857 out of more than 20 billion frames.

Based on our experience, service providers like to upgrade their network components during maintenance windows. The CRS-1s are typically positioned in the core of the network where bringing down a router for maintenance might have a cascading effect on the network and will, potentially, harm a large number of users. So reaching a hitless upgrade would be an admirable goal. The number of frames we recorded as lost in the test is so minute that we cannot imagine that any service provider should be too concerned about a CRS-1 to CRS-3 upgrade while the network is operational.

Upgrading a moving car’s engine is bound to be more troublesome.

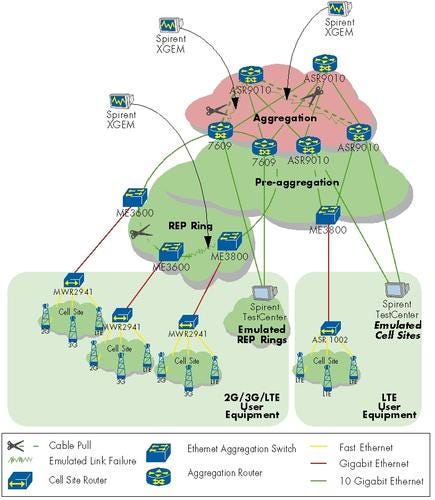

Page 8: Link & Node Resiliency in the IP RAN Backhaul

Summary: In this test, Cisco demonstrated network recovery as quick as 28 milliseconds in the aggregation and 91 milliseconds in the pre-aggregation after a physical link failure. In active failure detection tests, where no physical link was lost, the network recovered in 122 milliseconds in the aggregation and 360 milliseconds in the pre-aggregation. Also, separately, we tested to show a network recovery from a complete Cisco 7609 node failure in under 200 milliseconds.

Producing highly available components and solutions is one of the top goals of telecom manufacturers. The network should be “always on,” as one vendor put it a while ago – however, the reality of service provider requirements is sometimes different. Resiliency should come at a low cost or for free in the RAN due to consumers’ price pressure. Some base station vendors told us that they would rather let a cell site disconnect than invest in expensive redundant connections. Other base stations would cover the area while the failed link is recovering. Well, this worked fine in the voice era – it may not be such a great model for the Internet era, where spectrum at each cell site is mostly used for data traffic from 3G or even LTE.

Cisco’s IP Backhaul solution, as presented to us, consists of several tiers of aggregation, each tier employing a different resiliency mechanism – different in functions, performance, and probably cost. In the first tier, at the access, native Ethernet-based resiliency was used: Cisco’s proprietary Resilient Ethernet Protocol (REP), which Cisco claims provides fast and predictable convergence times in a Layer 2 ring topology. In the second tier, at the aggregation, IP/MPLS was used, and hence an MPLS-based recovery mechanism was employed: Fast Reroute (FRR, RFC 4090).

In the test we used the standard traffic profile as described earlier, emulating 2G, 3G, and LTE end users in parallel. We then simulated three types of failure: a link failure in the REP ring, a failure of an MPLS link with FRR-protected services between the 7609 and ASR 9010, and a failure of an MPLS FRR-protected link between two ASR 9010s.

For MPLS we could test both failure and restoration – how the network reverts back to normal behavior when the failed link gets back online. In the access resiliency case, Cisco explained that REP does not implement revertive behavior, so we could not test restoration times. The official Cisco explanation was that network operators prefer to wait for a time of their choice to manually switch the traffic direction in their rings.

Before we get to the results, there was another complication for this test: What kind of failure would we emulate as a realistic exercise? Sure, in the case that the precious fiber that experienced some traumatic event was directly connected to our active router or switch, the failure would be detected as soon as the Ethernet link goes down. This was the first type of test – the cable was physically removed by hand.

In other, more complex and less fortunate cases however, the failed fiber may be a long-haul one, connected via transmission equipment. In this case, a router or switch would not notice a loss of signal at all, and therefore robust mechanisms are required to detect the data path interruption. This scenario was emulated with the Spirent XGEM, which kept the Ethernet link up and simply dropped all packets in a single direction. To detect this, Cisco used Bidirectional Forwarding Detection (BFD) in the FRR network, and a “fast hello” mechanism within the REP network. Per standard EANTC procedure, any test consisting of a failure event was performed three times for consistency.

Table 3: Link Failure Results

| Failed Link | Failure Event | Failure or Restoration? | Out-of-Service Time, Run 1 (ms) | Out-of-Service Time, Run 2 (ms) | Out-of-Service Time, Run 3 (ms) |
| --- | --- | --- | --- | --- | --- |
| ASR 9010 - ASR 9010 | Physical Link Failure | Failure | 36.3 | 30.3 | 28.3 |
| ASR 9010 - ASR 9010 | Physical Link Failure | Restoration | 0.0 | 0.0 | 0.0 |
| ASR 9010 - ASR 9010 | Emulated Link Failure | Failure | 51.8 | 36.1 | 37.5 |
| ASR 9010 - ASR 9010 | Emulated Link Failure | Restoration | 0.0 | 0.0 | 0.0 |
| 7609 - ASR 9010 | Physical Link Failure | Failure | 27.9 | 17.8 | 27.4 |
| 7609 - ASR 9010 | Physical Link Failure | Restoration | 0.0 | 0.0 | 0.0 |
| 7609 - ASR 9010 | Emulated Link Failure | Failure | 119.3 | 121.1 | 109.6 |
| 7609 - ASR 9010 | Emulated Link Failure | Restoration | 0.0 | 0.0 | 0.0 |
| ME 3600 - ME 3600 | Physical Link Failure | Failure | 90.6 | 87.1 | 71.2 |
| ME 3600 - ME 3600 | Physical Link Failure | Restoration | Not Tested | Not Tested | Not Tested |
| ME 3600 - ME 3800 | Emulated Link Failure | Failure | 357.7 | 216.6 | 191.8 |
| ME 3600 - ME 3800 | Emulated Link Failure | Restoration | Not Tested | Not Tested | Not Tested |

The table above reflects the results we recorded. In any given failure, all traffic traversing the failed link was affected, which most often included downstream and upstream traffic, comprising multiple classes. Each cell in the table represents the maximum out-of-service time out of all directions and classes.

With all known factors included, the results met our expectations:

MPLS Fast Reroute usually fails over in less than 50 milliseconds.

Link restoration does not show noticeable packet loss due to an MPLS signaling feature called "make before break."

In the case of REP failure, the recipient (emulated user) from which we took the measurement showed a different value depending on how many nodes down in the ring it was connected – this is the reality of rings.

The test runs where BFD or “fast hello” came into play resulted in failover times of at least two times the control protocol interval, since it takes two missing control messages for the receiver to discover an out-of-service situation.
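
The relationship between control message interval and failover time can be sketched as follows. The configured BFD and “fast hello” intervals are not stated here, so the code only shows what the two-missed-messages rule of thumb would imply for the emulated-failure rows of the table. The function names are hypothetical, and the implied values are upper bounds, because the measured times also include the time needed to switch traffic over.

```python
def detection_floor_ms(hello_interval_ms, missed_hellos=2):
    """Lower bound on failover time if a failure is declared only after
    `missed_hellos` consecutive control messages are missing."""
    return hello_interval_ms * missed_hellos

def implied_max_interval_ms(measured_failover_ms, missed_hellos=2):
    """Largest control interval consistent with the fastest measured run."""
    return min(measured_failover_ms) / missed_hellos

# Emulated link failure results (milliseconds) from the table above.
runs = {
    "ASR 9010 - ASR 9010": [51.8, 36.1, 37.5],
    "7609 - ASR 9010": [119.3, 121.1, 109.6],
    "ME 3600 - ME 3800": [357.7, 216.6, 191.8],
}
for link, times in runs.items():
    print(f"{link}: interval of at most {implied_max_interval_ms(times):.1f} ms")
```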

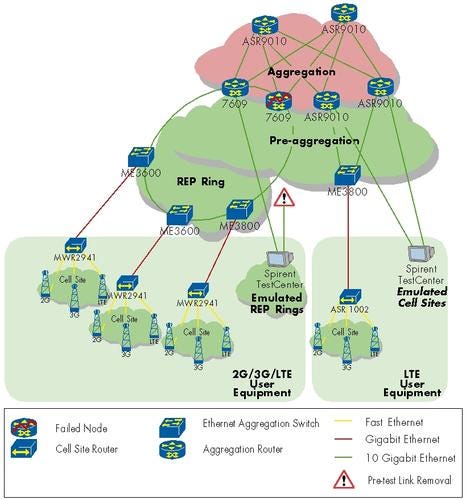

Node resiliency in the IP RAN backhaul

It is possible that an entire network device could suffer from a failure – be it from human error or simple power failure. This test validated whether Cisco’s mobile network solution provided mechanisms to bypass a failed element.

To execute the test, we abruptly shut down all power supplies of a router and watched what happened to the emulated users in the network. Of course, we expected that the rest of the network would continue to function. Specifically, the failed node’s peers had to continue providing identical service levels using Fast Reroute in Cisco’s MPLS-based aggregation network or REP in the pre-aggregation network.

We chose to fail a node at the border between the aggregation and pre-aggregation / access network – the Cisco 7609. Its failure would cause both failure detection and recovery mechanisms to be executed in parallel: MPLS Fast Reroute and REP. Similar to the link failure test, we performed the full test process – measuring out-of-service times for both failure and restoration (powering the device back on) – three times to verify consistency.

The combination of Cisco’s network design and the amount of traffic running in the test network meant that any protection scenario would inherently exceed the remaining network capacity. We took this oversubscription into account when evaluating the results and focused our attention on the high-priority traffic, which should not be affected by the reduced capacity in the network, and was thus expected to be affected only during the failure event.

Initial test runs showed results as expected in the downstream direction (traffic destined to the emulated customers and thus traversing the Fast Reroute protected MPLS network first, and following through the REP protected pre-aggregation into the access) of between 170 and 240 milliseconds. With upstream traffic, however, we observed more than a second of out-of-service time, longer than Cisco expected. Cisco engineers promptly looked into the issue and found that the Hot Standby Router Protocol (HSRP) on the 7609 took a bit longer than expected to rewrite the hardware address of the failed router under the traffic load we emulated.

After Cisco fixed this issue with a patch, upstream traffic failover times matched those of downstream, as shown in the results. Note: The restoration times measured were only of the MPLS network, as the REP ring was reverted manually before the recovery test run. As mentioned in the link resiliency test, manual restoration is required for the REP protocol on the Cisco ME3600/ME3800 components.

Page 9: All-IP RAN Scale

Summary: We produced nearly 120 Gbit/s of lossless traffic across a series of 12 10-Gigabit Ethernet links traversing Cisco's ASR 9010 and Nexus 7000.

Outside the mobile world it is hard to believe, but the vast majority of mobile backhaul networks still run on old technologies such as Asynchronous Transfer Mode (ATM) or even older 2-Megabit Time-Division Multiplexing (TDM) networks. In order to handle the explosive growth of 3G data traffic, base station vendors invented hybrid data offload over IP/Ethernet a while back – but the full move from ATM to packet-based technologies such as Ethernet and IP is only now coming with newer versions of 3G technology and Long Term Evolution (LTE).

Cisco is, of course, welcoming the LTE push with open arms, since IP is an area Cisco is particularly comfortable with. At some point in the future, new networks might even be able to shake off the burden of transporting legacy ATM and TDM traffic for older base stations.

In this test case, we agreed to validate Cisco’s LTE-ready backhaul architecture supporting IP services over Ethernet only. No more legacy 2G or 3G equipment would be supported across this infrastructure, so it is suited for LTE-only backhaul deployments. The core element of this all-IP Cisco network design is Cisco’s ASR 9010.

In the test, we emulated a large number of LTE subscribers sending IP traffic from LTE eNodeBs towards the mobile core. The traffic emulation took into account Cisco’s aggregation model and in essence emulated a collection of aggregation switches and IP-only cell site routers. Our goal was to validate whether a pair of ASR 9010s could support:

769,000 all-IP users

6,000 all-IP flows

120 Gbit/s full-duplex bandwidth

We leveraged the emulation configuration for realistic user emulation as discussed earlier. The only modification we had to make was to scale our call model slightly further so that the directly connected Nexus 7000 interfaces and the next-hop ASR 9010 would reach line rate. We ran the test for five minutes to ensure that the network was stable with this load.

As a result, we can confirm that 119.46 Gbit/s full-duplex traffic was achieved.

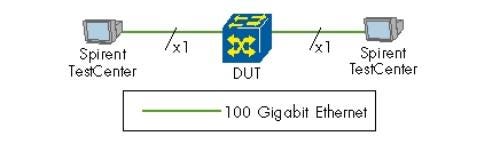

Page 10: 100GbE Throughput

Summary: Cisco's CRS-3 100-Gigabit Ethernet interfaces achieved throughput of 100 percent full line rate for IPv4 packets of 98 bytes and larger, IPv6 packets of 124 bytes and larger, and for Imix packet size mixes for both IPv4 and IPv6.

It is hard to fathom the immense volume and diversity of traffic expected to pass through the core network aggregating 2G, 3G, and LTE mobile users. In any case, with the bandwidth required for LTE, and the effect latency has on the circuit emulation services required for 2G and 3G services over a packet backhaul, the core network will be expected to support very high throughput requirements with low latency.

One of the key components providers will be relying on in order to solve the capacity issue in the backhaul is the introduction of 100-Gigabit Ethernet interfaces into their core networks. Even though the test did not focus on the core network components, we wanted to make sure that when the floodgates of broadband mobile data traffic truly open, a mobile operator will be able to satisfy all its customers with the capacity needed.

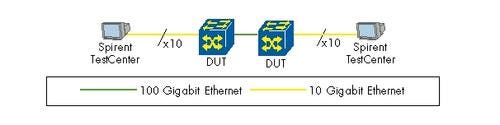

We agreed to perform three types of tests here: IPv4 throughput performance, IPv6 throughput performance, and a mix of IPv6 and IPv4. Just getting the test bed ready for the test was a challenge: C-Form Factor Pluggable (CFP) optics are in high demand, and so are the actual interfaces both for the Cisco device under test and for the Spirent tester.

The 100GbE interface for Cisco’s CRS-3 is not shipping to customers yet - we evaluated pre-production versions. For the CFPs, we waited for the stars to align and managed to get our test bed ready.

The good news is that the CRS-3's 100GbE interface is capable of running at full line rate under the smallest uniform frame sizes we tested: 98 bytes for IPv4 and 124 bytes for IPv6. At the smallest size, the hardware has to process more than 100 million packets per second, which is no small feat. The rest of the frame sizes we tested - a representative spread of 256, 512, 1024, 1280, and 1518 bytes - also had no problems passing the test at full line rate. (Why did we not choose 64-byte frame sizes for the line rate test? No network runs with empty TCP acknowledgement packets only. Cisco has confirmed to us multiple times in the past that its equipment is optimized for real environments - optimizing it for unrealistic lab tests would be too expensive.)
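
The packet rates behind these frame sizes can be worked out from the standard Ethernet per-frame overhead. A minimal sketch, assuming the quoted frame sizes include the FCS and that each frame additionally occupies 8 bytes of preamble and 12 bytes of inter-frame gap on the wire (the function name is illustrative):

```python
ETH_OVERHEAD_BYTES = 20  # 8 bytes preamble/SFD + 12 bytes inter-frame gap

def line_rate_fps(link_bps, frame_bytes):
    """Frames per second needed to fill a link with fixed-size frames."""
    return link_bps / ((frame_bytes + ETH_OVERHEAD_BYTES) * 8)

for size in (98, 124, 256, 512, 1024, 1280, 1518):
    print(f"{size:>5} bytes: {line_rate_fps(100e9, size) / 1e6:7.2f} Mpps")
```

Under these assumptions, 98-byte frames on a 100-Gbit/s link work out to roughly 105.9 million frames per second, and 124-byte frames to roughly 86.8 million.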

Our initial test showed that at small frame sizes the performance of the new interfaces was a few percentage points below 100. Cisco investigated and repeated the test with a newer version of the pre-production hardware. The single-sized packet throughput performance was good with the newer hardware: The router reached line rate across all packet sizes evaluated.

In addition to uniform single-sized packet performance evaluation, we carried out a set of tests mimicking a typical Internet traffic mix (referred to as Imix). After all, in the core of the network it is unlikely that a single frame size would dominate the wires.

The good folks at the Cooperative Association for Internet Data Analysis (CAIDA) have published packet size distributions from several public exchanges, which allowed us to tailor our Imix to the real world. For IPv4 we used the following mix: 98, 512, and 1518 bytes. For IPv6 we replaced the first frame size with 124 bytes. In both profiles we set the traffic weights to favor the smaller frame sizes (more than half of the traffic was small frames), while using only a small portion for the biggest frame sizes.

As a side note, this test showed that the Spirent TestCenter 100-Gigabit Ethernet hardware was very new and cooked al dente. When we tried to configure BGP routing, the Spirent experts blushed and responded that they only support static routes on the 100-Gigabit Ethernet traffic generator at this time. But we were assured they would fix this soon. As a workaround, we had to connect the Spirent TestCenter to the Cisco CRS-3 via ten 10-Gigabit Ethernet interfaces on each side.

Through a configuration error we actually configured the tester with the Imix packet sizes mentioned above, plus an additional 18 bytes, such that our IPv4 Imix looked like 116, 530, and 1536 bytes - a valid and legal Imix, but rather unusual. The test passed with flying colors, showing that this IPv4 Imix could reach 100 percent line rate.

The story was not the same for our modified IPv6 Imix, where we inadvertently configured a mix of 142-, 530-, and 1536-byte frames. We expected the same full line rate results but were unable to reach them. We repeatedly recorded fewer than 40 frames with Ethernet Frame Check Sequence (FCS) errors out of our 3.6 billion frames sent.

Both Spirent and Cisco spent a few weeks in a development lab and finally were able to give us the good news: We had uncovered a bug in the hardware during the testing, but fear not, a bug fix had already been created. So we rolled up our sleeves and performed a retest that confirmed that the fix had indeed solved the issue: Now the IPv6 Imix test resulted in full line rate without any checksum errors.

The 100 percent line rate performance of the 100GbE interfaces that we tested was one positive result of the test. A second, and perhaps equally impressive, result was the dedication that Cisco (and Spirent) showed in getting to the core of the checksum errors issue and providing a timely fix.

Identifying a software or even hardware issue while executing a test is always great for us, because it permits live observations of the vendor's engineering forces in resolving the issue. Cisco did identify and resolve its issue within less than four weeks. Of course, the initial reflex of the vendor was to claim "the test plan is invalid" and "the test equipment is malfunctioning," but, hey, we are used to that across the board.

Page 11: NAT64 Session Setup Rate & Capacity

Summary: This test achieved 40 million NAT64 translations between IPv6 and IPv4 addresses on the CRS-1 platform.

According to Comcast Corp. (Nasdaq: CMCSA, CMCSK), citing the American Registry for Internet Numbers (ARIN), the "exhaustion of the pool of IPv4 addresses will occur sometime between 2011 and 2012. IPv6 is the next-generation protocol that can connect a virtually unlimited number of devices." (See Comcast, ISC Go Open-Source With IPv6.)

Considering that the number of mobile Internet devices is constantly increasing, mobile service providers are facing, or will soon face, the IPv4 address depletion issue before the rest of us.

Cisco’s solution to the address depletion issue is to use Network Address Translation (NAT). The legacy form of the protocol, sometimes called NAT44, allows masking and aggregation of large private IPv4 address ranges into smaller blocks of public IPv4 addresses.

The newer NAT64 accomplishes the same job, translating between IPv6 and IPv4. Since IPv6 has a vast address space, it is naturally a great candidate for mobile clients as long as IPv4 is still running on currently installed equipment and servers. NAT64 can bridge the gap – which is why we aimed to test it here.

Cisco positioned its NAT engine in the core of the network – in the CRS-1. From a network design perspective this is a reasonable solution. The router at the edge of the mobile operator’s network can be the last point upstream using IPv6 addresses.

Traffic destined to the Internet, where IPv4 is still king, is then translated by the CRS-1 and routed to its destination. This allows the operator to assign real and usable IPv6 addresses to its users’ mobile devices. When the migration to IPv6 is complete, it will simply be a matter of eliminating the NAT64 function and changing the routing to the outside world.
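
To make the address translation concrete, here is a minimal sketch of how an IPv4 address can be embedded in, and extracted from, an IPv6 address using the well-known 64:ff9b::/96 prefix defined in RFC 6052. Whether the tested configuration used this prefix is an assumption on our part, and the function names are illustrative.

```python
import ipaddress

# Well-known NAT64 prefix from RFC 6052 (assumed here for illustration).
WKP = ipaddress.IPv6Network("64:ff9b::/96")

def ipv4_to_nat64(v4, prefix=WKP):
    """Embed an IPv4 address in the low 32 bits of a /96 NAT64 prefix."""
    v4_int = int(ipaddress.IPv4Address(v4))
    return ipaddress.IPv6Address(int(prefix.network_address) | v4_int)

def nat64_to_ipv4(v6, prefix=WKP):
    """Recover the embedded IPv4 address from an address inside the prefix."""
    addr = ipaddress.IPv6Address(v6)
    if addr not in prefix:
        raise ValueError(f"{addr} is not inside {prefix}")
    return ipaddress.IPv4Address(int(addr) & 0xFFFFFFFF)

print(ipv4_to_nat64("192.0.2.33"))         # 64:ff9b::c000:221
print(nat64_to_ipv4("64:ff9b::c000:221"))  # 192.0.2.33
```

An IPv6-only mobile client would address an IPv4-only server through such a synthesized IPv6 address, and the translator maps it back to IPv4 on the way out.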

The focus of the test was Cisco’s Carrier-Grade Services Engine (CGSE) modules (installed in the CRS-1 router). The service engine is an integrated multi-CPU module, which offers, among other things, Network Address Translation between IPv6 and IPv4.

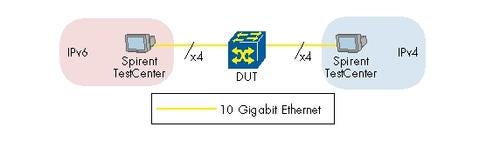

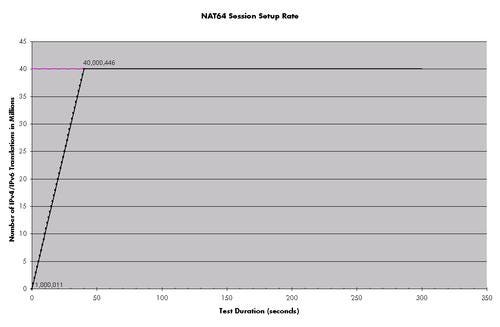

We were told by Cisco that the NAT64 translation feature was stateless, which is why we felt comfortable using Spirent’s TestCenter, a Layer 2-3 traffic generator, to perform the test. Cisco challenged us to validate its claim that each blade supports 20 million translations maintained in parallel and that users could build 1 million connections per second. Two CGSE modules were installed in the CRS-1 chassis; hence we expected the router to be able to translate 40 million unique source and destination pairs.

We defined several tester ports as “client” ports – users with IPv6 addresses. Several other ports were configured as IPv4-only servers to which the users were trying to connect. This way we were able to create 40 million unique source and destination pairs that the CGSE-enabled CRS-1 had to convert in order to facilitate end-to-end connectivity.

We started off by sending a million unique flows per second through the tester. Since the connections were stateless, we did not have to wait for the TCP setup time and were able to verify that 1 million new connections per second could be established. After 40 seconds we indeed reached our target number of 40 million connections and continued sending traffic for a total of 5 minutes.

Page 12: NAT64 Module Redundancy

Summary: We demonstrated that recovery from a NAT64 module failure occurred in less than 120 milliseconds.

Service providers and vendors alike are familiar with the reality that even the best-engineered hardware can still fail. Cisco plans for component failures by equipping each of its routers with a secondary CGSE module to be used as a backup in the event that the primary fails. CGSE modules are the services modules that are responsible for NAT translations – the focus of this test.

For service providers, installing redundant services cards means that less line card real estate is available for network interfaces, but a high level of resiliency is possible for the services, such as NAT64.

Cisco claims that the CGSE supports a “highly available architecture” with line rate accounting and logging of translation information, allowing for a more graceful recovery when a module fails. We decided to test this statement, emulating a failure by removing the active CGSE module from the chassis while at the same time running traffic from the Spirent TestCenter through the CGSE-equipped CRS-1. We derived the out-of-service time from the number of lost frames measured in the network.

The test required a different philosophy than the one traditionally used for failover scenarios in backbones – 50 ms recovery is, after all, meaningless to a NAT64 user. As long as the user’s session remains active, the user is unlikely to even realize that a failure has occurred. TCP, for example, is likely to treat a temporary frame loss as a sign of congestion and back off. So the main aspect for the test was to verify that when we ask the CGSE configured for redundancy to support 20 million translations, it can still do its job when a failure occurs.

We sent IPv6 traffic destined to IPv4 addresses and traffic back from said IPv4 addresses to the IPv6 hosts. After we verified that the CRS-1 CGSE was properly translating traffic, we removed the active CGSE module. The modules were configured in hot-standby mode, which meant that when we reinserted the module that was removed we expected the router to take note, but not lose any frames. The now active CGSE module was expected to remain active. We monitored in every test run which of the two modules was active and repeated the test a total of three times.

In each of our failure tests we lost about the same number of packets: up to 120,000. Given that we sent, over the duration of the test, more than 119 million frames, the worst out-of-service time we measured was 120 milliseconds. During recovery (i.e., when we reinserted the CGSE) we recorded no frame loss.
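
Deriving an out-of-service time from frame loss is a simple division of lost frames by the offered frame rate. A minimal sketch; the rate of 1 million frames per second is an assumption chosen because it is consistent with 120,000 lost frames corresponding to 120 milliseconds, and the function name is illustrative.

```python
def out_of_service_ms(lost_frames, frames_per_second):
    """Out-of-service time implied by frame loss at a constant offered rate."""
    return lost_frames * 1000.0 / frames_per_second

# Assumed constant offered load of 1,000,000 frames per second.
print(out_of_service_ms(120_000, 1_000_000))  # 120.0 ms
```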

For service providers the results should be reassuring. Adding a second CGSE module will increase the NAT64 availability, and users will be unlikely to notice that a failover occurred.

— Carsten Rossenhövel, Managing Director; Jambi Ganbar, Project Manager; and Jonathan Morin, Senior Test Engineer, European Advanced Networking Test Center AG (EANTC)
