New Heavy Reading report highlights the evolving role of hyperscalers in edge computing deployments and other telco workloads

Heavy Reading's Telcos and Hyperscalers report evaluates the willingness of CSPs to partner with the hyperscalers.

Heavy Reading collaborated with Ericsson, Google Cloud, Intel and Viavi to conduct a survey of 101 global communications service providers (CSPs) that have launched edge computing solutions or are planning to do so within 24 months. The aim was to understand how CSPs and hyperscale cloud providers (HCPs) work together to enable edge computing. One of the drill-downs of the survey was to evaluate the willingness of CSPs to partner with the hyperscalers, specifically at the edge but also for other telco workloads.

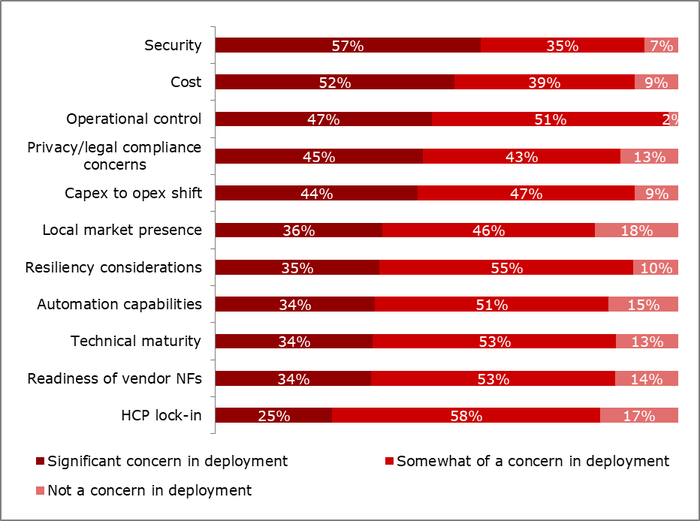

It is clear from the overall survey results that carriers' attitudes toward partnering with the hyperscalers have become more optimistic. However, many perceived challenges remain (see the figure below). Unsurprisingly, "security" and "cost" are the most prevalent concerns, garnering by far the greatest percentages of "significant concern" votes. Yet it is the third line item, "operational control," that draws the greatest combined share of "significant concern" and "somewhat of a concern" votes: a whopping 98% of respondents. Carriers are more comfortable with the resiliency, recoverability and overall performance available with the HCPs, but continue to be unwilling to relinquish control of the network. HCP solutions that enable CSPs to monitor, maintain and scale their own environment and workloads are essential to the success of these joint ventures. As CSPs become more comfortable with working with HCPs, this may change. Even so, CSPs will always need to retain control of the workload, service or application to ensure that the HCP solution continues to meet both their needs and those of their customers and to give them the ability to change HCPs if need be.

"HCP lock-in" has the smallest percentage of "significant concern" responses, but conversely, it has the greatest percentage of "somewhat of a concern" responses. Heavy Reading explores this issue of HCP lock-in further in the report; it is clear that lock-in is a source of persistent angst among service providers. For now, it is considered a necessary evil to reap the benefits of agility, scalability, lower capex and AI capabilities that the hyperscalers bring to these CSP partnerships. The CSPs would like to add "lower opex" to this list of benefits, paired with more latitude in shifting workloads from one HCP to another, or even, dare we say it, using multiple HCPs for individual workloads. For now, however, vendor lock-in is on a slow simmer as an issue, and CSPs are resigned to tying specific workloads to specific HCPs.

Loss of control is the most pervasive concern in moving network functions to the HCP cloud

What level of concern do you have about considerations and obstacles that are limiting your deployments of network functions in HCP clouds? (Source: Heavy Reading, 2023)

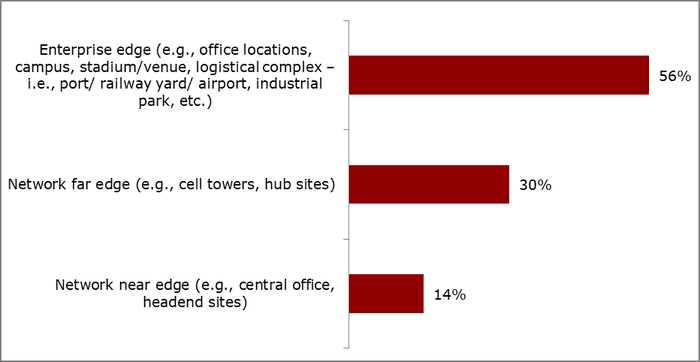

Where on the network continuum are edge deployments taking place?

These concerns reflect the changing demographics of edge computing. Edge computing means different things to different people. When Heavy Reading asked CSPs where they felt edge platforms should be deployed, a majority (56%) pointed to the enterprise edge as the most appropriate location for deployment. This suggests that those surveyed are largely focused on reducing latency, improving overall application performance and/or supporting massive Internet of Things (IoT) implementation. Meanwhile, 30% pointed to the network far edge as their preferred location, and the remaining 14% suggested the network near edge would meet their needs for edge deployments.

Where is the edge?

Q: Where does a managed edge platform make the most sense? (Source: Heavy Reading, 2023)

The percentages above show a shift in how service providers think about their edge deployments. When edge computing implementations first began to emerge roughly four years ago, the bulk of CSP implementations focused on operational efficiencies. The latency targets were about 20ms round-trip delay, which was satisfactory for most use cases at the time but insufficient for AR/VR-enabled applications for either the consumer or enterprise. Instead, the interrelated use cases were intended to provide a relief valve for network pressure during times of traffic stress, keep more traffic local to reduce traffic hair-pinning and reduce traffic volume at the network core. These use cases can be justified with a straightforward ROI analysis. On the other hand, as deployments reach further toward the edge of the network, implementation costs increase along with the number of edge nodes, and the spread creates end-to-end service management challenges. As Heavy Reading's survey results show, CSPs are increasingly likely to turn to the HCPs to assist in scaling their edge services due to the speed and agility with which they can add or remove edge nodes, compute and storage capacity and use cases.

Read the full report for more edge computing insights

We asked respondents about the timeframe of deploying on HCP cloud infrastructure at scale for a variety of telco workloads beyond edge computing, including packet core, cloud RAN, BSS and OSS. It is interesting to note that edge computing did not have the most immediate timeframe. For a description of those results and to gain more in-depth details of service providers' perspectives on edge computing deployment and their hyperscaler partners, download and read the full report now.

This blog is sponsored by Ericsson.
