The questionable economics of AI in the RAN

Some companies are toying with radio access networks (RANs) powered by high-performance GPUs like those offered by Nvidia. But others argue there isn't enough demand to warrant the expense.

MWC24 – Barcelona – A new association backed in part by Nvidia is looking to inject AI technology directly into the radio access network (RAN). Doing so might supercharge the speeds available on 5G and eventually 6G.

However, this kind of gambit may carry some eye-watering costs, particularly if it involves Nvidia's Grace Hopper "superchip," which pairs a central processing unit (CPU) with a graphics processing unit (GPU).

As a result, some companies are arguing that high-performance large language models (LLMs) are unnecessary for an AI-powered RAN. Instead, small language models (SLMs) might be more appropriate – and cheaper.

But there's one unknown factor: What other jobs could computers do at the base of cell towers? If demand materializes for generalized edge computing, there might be a reason to push high-performance, GPU-based computing closer to the edge of the RAN.

Testing AI in the RAN

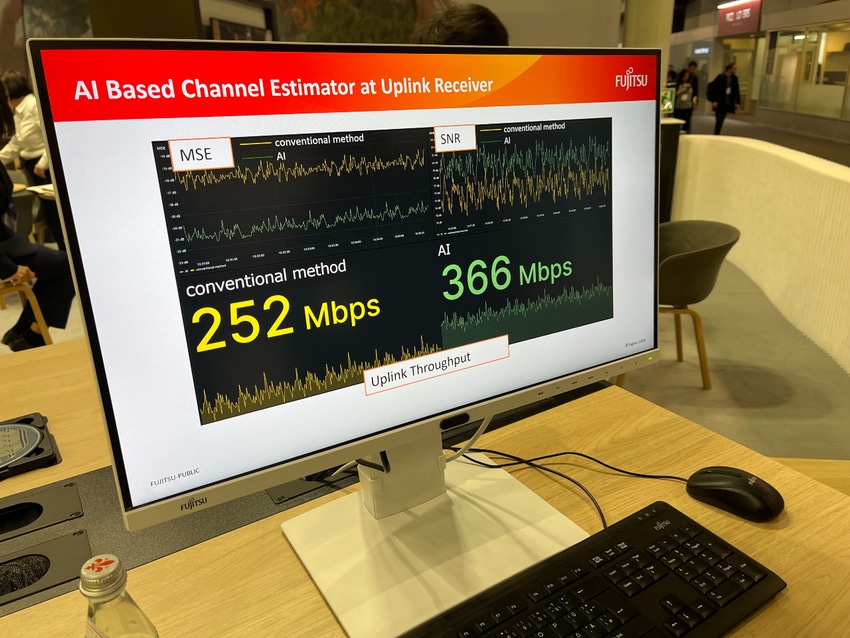

Fujitsu – an up-and-coming RAN vendor – is looking to set itself apart in this area. At its MWC booth, the company is showing off the results of its AI work with Nvidia. Fujitsu essentially supercharged its 5G radios by pairing them with Nvidia's Grace Hopper superchip.

"There's a lot of interest" in AI-powered RAN operations, Fujitsu's Rob Hughes told Light Reading. He said the company used Nvidia's GPUs to run a convolutional neural network in its radio products. Networks of this kind are commonly used in AI applications to sharpen blurry images. In Fujitsu's MWC demo, the network is instead used to clean up signal processing. The result is a dramatic improvement in uplink speeds, from 252 Mbit/s to 366 Mbit/s.
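To put the demo figures in perspective, the uplink gain works out to roughly 45%. The quick check below uses only the two throughput numbers Fujitsu reported; the `percent_gain` helper is a hypothetical name chosen for illustration.

```python
# Uplink throughput figures reported for Fujitsu's MWC demo (Mbit/s).
baseline_mbps = 252
ai_assisted_mbps = 366


def percent_gain(before: float, after: float) -> float:
    """Hypothetical helper: relative improvement from `before` to `after`, in percent."""
    return (after - before) / before * 100


gain = percent_gain(baseline_mbps, ai_assisted_mbps)
print(f"Absolute gain: {ai_assisted_mbps - baseline_mbps} Mbit/s")  # 114 Mbit/s
print(f"Relative gain: {gain:.1f}%")  # 45.2%
```

In other words, the AI-assisted radio delivered about 1.45 times the baseline uplink throughput in this demo.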

It's an example of the kinds of performance gains that are possible when companies apply AI technologies to a RAN's radio frequency (RF) environment.

Hughes said Fujitsu's Nvidia-powered radios will be commercially available later this year. Meanwhile, Nokia announced its own partnership with Nvidia this week.

Fujitsu officials explained that the company might be able to achieve similar results using standard CPUs instead of GPUs. However, it might need to purchase and install 10 or more CPUs to match the performance of a single GPU, a costly approach that would likely consume a significant amount of energy. Moreover, Nvidia sells AI management software for its GPUs that may not be available for CPUs.

The GPU allure

Nvidia's GPUs have taken the AI market by storm because they are viewed as essential to running most advanced AI services. Specifically, they're being deployed in large data centers to help train LLMs like those behind OpenAI's ChatGPT.

Thus, demand for Nvidia's chips is skyrocketing. According to The Wall Street Journal, the company's chips are delivered to customers in armored cars.

As a result, investors have been flocking to Nvidia, sending the company's stock to sky-high levels. Indeed, Nvidia this week surpassed both Amazon and Google's Alphabet in total market capitalization, making Nvidia the third most valuable company in the US.

That's likely why several industry players – including OpenAI CEO Sam Altman – are working to finance Nvidia alternatives.

But such alternatives are expected to take years to emerge, particularly given Nvidia's lead. So, for the foreseeable future, Nvidia is likely to remain the dominant supplier of high-performance GPUs for AI services.

The edge and the alternatives

It's not clear whether those GPUs are necessary for AI-powered RAN services.

One reason network operators might invest in Nvidia GPUs for their RAN sites would be to offer speedy AI services at the edge. But this is not a new concept.

As Light Reading has previously reported, edge computing captured the imagination of many in the telecom industry prior to the COVID-19 pandemic. Operators hoped to deploy mini edge computing data centers at the base of their cell towers. By doing so, they could offer speedy, low-latency Internet connections. But traffic demands during the pandemic dulled that interest.

Now, in the age of AI, there's a chance for operators to put Nvidia's GPUs into those edge computing data centers in order to supercharge them with AI capabilities. But some observers are skeptical.

"I can buy the notion that secondary network apps could live there," Disruptive Wireless analyst Dean Bubley wrote in a brief LinkedIn post about edge computing. He argued that GPU-powered RAN sites could support services including security, sensing and programmable radios. "But I'm unconvinced by the mass market cloud/edge pitch," he added.

Meanwhile, officials with wireless research company InterDigital argued that high-performance AI models may not be needed for RAN operations. Milind Kulkarni, head of the company's wireless labs, said it would not make sense for operators to deploy LLMs in computers at the base of their cell towers.

LLMs like the one behind ChatGPT can converse about a wide range of topics. Smaller, more targeted AI options, SLMs, can be dedicated to niche applications such as running RAN operations. Kulkarni said that, once properly trained, SLMs may not need high-performance computing resources like those provided by Nvidia's GPUs.

Moreover, he said that "sequential learning" technologies could spread the use of AI technologies across both a RAN transmission site and a customer's handset. In that kind of setup, there would be even less demand for high-performance computing resources within the RAN.

Indeed, startup DeepSig this week announced AI-based neural receiver technology, which can run on Intel's chips.

However, Kulkarni said he does see a need for more computing power within the RAN, particularly as the industry moves toward 6G. AI "will change the decisions that we make about 6G," he said.
