ZTE takes on Light Reading's 100GbE router test for traffic forwarding, MPLS, scalability and more

July 7, 2011

As Light Reading regularly points out, fixed and mobile packet networks worldwide are experiencing increases in traffic, driven by video applications, mobile data and business services. (See Planning for 100G & Beyond.)

Carriers, application service providers and content distributors have been waiting for higher-speed packet interfaces to push past the limits they have reached, both in router interface speed and in router-attached bit rate per fiber pair. As more and more vendors begin to roll out the first generation of 100Gigabit Ethernet (100GbE) interfaces for their flagship routers, the European Advanced Networking Test Center AG (EANTC) and Light Reading have set up a public test program aimed at providing operators with an unbiased, empirical view of these new interfaces. In this open test program, EANTC is validating the performance, service scalability and power efficiency of 100GbE router interfaces.

News of the first deployments was breaking just as we sat down with several service providers and asked them for a list of concerns regarding these new, currently expensive, interfaces. (See Verizon Deploys 100G Ethernet in Europe.) We were not surprised to hear that performance for IPv4, IPv6 and multicast was high on the agenda -- new high-speed interfaces often mean new chips, and new chips can always have a few forwarding problems. But service providers now focus more than ever on the scalability of Virtual Private Network (VPN) services and on power efficiency: a bunch of super-fast interfaces in the backbone will boost the provider's operations only if revenue-generating services are supported on these new interfaces. Keeping power consumption down has also become a mandatory requirement, as power accounts for 25 percent of all operational costs in some networks.

We took this set of requirements and created a test plan that we intended to apply to all vendors participating in the program. Vendors could also define two tests of their own, subject to our approval.

Together with Ixia (Nasdaq: XXIA), our test equipment vendor of choice for this program, we prepared the test equipment for the scale and breadth of our testing and then executed the tests at each vendor's lab. The results will be presented in a series of articles right here on Light Reading -- one for each vendor that goes through the tests. As you'll see in this first report in our series, ZTE's core router is as fast as any other on the market, and our tests proved it to be reliable and scalable as well.

Test Contents

ZTE's Solution

Ixia's Test Equipment

MPLS Traffic Differentiation

Services Scalability: VPLS

Transport Scalability: RSVP-TE

Energy Efficiency

Route Balancing

Link Aggregation

Conclusion

About EANTC

The European Advanced Networking Test Center (EANTC) is an independent test lab founded in 1991 and based in Berlin, conducting vendor-neutral proof of concept and acceptance tests for service providers, governments and large enterprises. EANTC has been testing MPLS routers, measuring performance and interoperability, for nearly a decade at the request of industry publications and service providers.

EANTC's role in this program was to define the test topics in detail, communicate with the vendors, coordinate with the test equipment vendor (Ixia) and conduct the tests at the vendors' locations. EANTC engineered the test setups, then documented the results extensively. Vendors participating in the campaign had to submit their products to a rigorous test in a contractually defined, controlled environment. For this independent test, EANTC reported exclusively to Light Reading. The vendors participating in the test did not review the individual reports before their release. Each vendor had the right to veto publication of its test results as a whole, but not to veto individual test cases.

-- Carsten Rossenhövel is Managing Director of the European Advanced Networking Test Center AG (EANTC), an independent test lab in Berlin. EANTC offers vendor-neutral network test services for manufacturers, service providers, governments and large enterprises. Carsten heads EANTC's manufacturer testing and certification group and interoperability test events. He has over 20 years of experience in data networks and testing.

Jonathan Morin, EANTC, managed the project, worked with vendors and co-authored the article.

With annual sales north of 70 billion yuan (RMB) and more than 80,000 employees, ZTE Corp. (Shenzhen: 000063; Hong Kong: 0763) is one of the world's largest telecom equipment vendors, making almost everything from handsets to core routers. EANTC has been working with ZTE for several years now, almost exclusively in our Multiprotocol Label Switching (MPLS) and Carrier Ethernet multi-vendor interoperability events. Increasingly, we have been seeing ZTE's name appear on short lists for service provider tenders and in Light Reading articles. As far as giants go, ZTE has slowly and consistently made its way into the international arena and now stands tall among the international players in the service provider router and switch market. (See ZTE Increases Annual Sales by 17%.) We were not surprised when ZTE accepted our invitation to join the 100GbE test program, quickly prepared for the testing and hosted our team at its Nanjing facilities in China. Not only did the testing provide unique insight into the quality of ZTE's core router and into the company's character and dedication, it was also a pleasant opportunity to explore Chinese culture and culinary delights.

We were greeted by a competent team of engineers who were well-versed in core and edge routing/MPLS platforms fueled by 100Gigabit Ethernet interfaces. The router ZTE brought to the test was the ZXR10 M6000-16, a full-rack router with two sets of 11 slots, of which we needed only six. The model names for the 10GbE and 100GbE cards are 04XG-SFP+S and 01CGE-CFP-S respectively, with Reflex Photonics Inc.'s LR4 CFP used in the 100GbE card.

ZTE explained that the ZXR10 M6000-16 configuration we tested is also marketed as a configuration of the ZXR10 T8000, using identical hardware and software. According to ZTE, the results presented in our article should therefore apply to both routers. As ZTE pointed out, upgrading an earlier ZXR10 M6000-16 or T8000 to 100GbE requires the interface itself and an updated switching fabric -- the installed base of chassis is otherwise ready. For optics ZTE used Reflex Photonics LR4 CFPs, although the company told us it is currently working on building its own. ZTE chipsets, ZTE optics, ZTE routers -- vertical integration to a degree you rarely see in this industry.

What kind of performance test could we conduct without appropriate load generators and traffic analyzers? We worked closely with Ixia to ensure that our scalability goals were reached while meeting our No. 1 goal of testing services realistically.

It's worth reminding those who don't use these tools day to day that the days of so-called "packet blasting" are long past. Packet blasting is still an important baseline test, but looking only at forwarding capacity is no longer sufficient. Thankfully, IxNetwork -- the software we used for all of the tests -- allowed us to emulate more realistic scenarios. MPLS services testing requires intelligence in the tester to represent a virtual network with hundreds of nodes. IxNetwork calculates the resulting traffic to be sent to the device under test across the directly attached interfaces -- including signaling and routing protocol data as well as emulated customer data frames. This way, a reasonably complex environment with hundreds of VPNs and tens of thousands of subscribers can be emulated in a representative and reproducible way.

For the 100Gigabit Ethernet test interface we used Ixia's K2 HSE100GETSP1 card, alongside a pair of its 8-port 10Gigabit Ethernet cards, all running in an XM12 chassis. The software used was IxNetwork version 5.70.352.8 and IxOS version 6.00.700.3.

EXECUTIVE SUMMARY

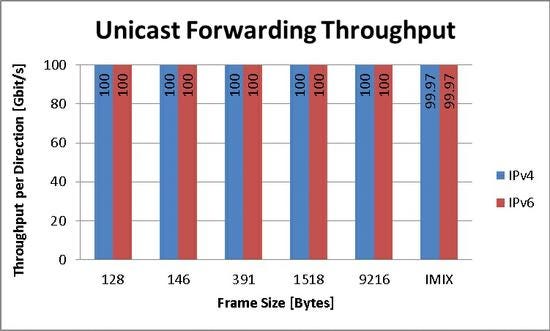

The ZTE ZXR10 M6000-16 passed our performance tests at 100 percent of line rate for a range of IPv4 frame sizes. It achieved the same for IPv6 traffic following a firmware upgrade on the router.

Core and aggregation routers are expected to master a wide range of advanced tasks today. At the same time, however, basic data throughput remains a mandatory performance criterion.

That's why measuring the throughput performance of an interface is commonly agreed to be a very good place to start. It allows us to characterize the design of the solution by pushing its limits in terms of forwarding speed, lookups and memory usage. RFC 2544 has provided a standard methodology for such tests since 1999. It is a convenient tool kit, especially since it is so well accepted by vendors and so widely implemented by test equipment vendors. The flip side of the coin is that the RFC has not been adapted to today's requirements.

So we supplemented the RFC with some additional parameters that the vendors had to adapt to. One such parameter is a mix of frame sizes -- sometimes known as an Internet Mix (IMIX) -- representative of the most-used frame sizes seen online, according to the folks at the Cooperative Association for Internet Data Analysis (CAIDA). Our IMIX consisted of the following weights: nine parts of 64-byte frames, two parts of 576-byte frames, four parts of 1,400-byte frames and three parts of 1,500-byte frames. For IPv6, we used nine parts of 84-byte frames instead of 64-byte frames. We also added a large frame size (9,216 bytes) and a non-word-aligned frame size of 391 bytes, just to make sure that we hit a couple of nasty situations the 100GbE interface would have to deal with in reality.

And let's not forget that, for bonus points, we asked ZTE to repeat all the tests with IPv6 traffic -- the future is just around the corner, and service providers installing this monster of a router are not likely to be looking for a forklift upgrade in the next five to eight years. IPv6 was a must. The goal of the test was simple enough (no routing protocols were used) and did not diverge from the original intention of the RFC: blast the router with line-rate bidirectional traffic and verify that no frames are lost and that no suspiciously high latency is recorded. And this is exactly what we did.
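To see what this IMIX means for the router's packet rate, the weights can be turned into an average frame size and a line-rate frame rate. The sketch below assumes the standard 20 bytes of per-frame wire overhead on Ethernet (8-byte preamble and start-of-frame delimiter plus a 12-byte minimum inter-frame gap) and a 100 Gbit/s link in each direction; the function names are ours, not IxNetwork's.

```python
# IMIX weights used in the test: frame size in bytes -> number of parts.
IMIX_IPV4 = {64: 9, 576: 2, 1400: 4, 1500: 3}
IMIX_IPV6 = {84: 9, 576: 2, 1400: 4, 1500: 3}

# Besides the frame itself, each Ethernet frame occupies an 8-byte
# preamble/SFD and a 12-byte minimum inter-frame gap on the wire.
PER_FRAME_OVERHEAD_BYTES = 20


def average_frame_size(mix):
    """Weighted mean frame size of a mix, in bytes."""
    total_parts = sum(mix.values())
    return sum(size * parts for size, parts in mix.items()) / total_parts


def line_rate_fps(mix, link_bps=100e9):
    """Frames per second that fill a link of link_bps bits/s with the given mix."""
    wire_bits_per_frame = (average_frame_size(mix) + PER_FRAME_OVERHEAD_BYTES) * 8
    return link_bps / wire_bits_per_frame


for name, mix in (("IPv4", IMIX_IPV4), ("IPv6", IMIX_IPV6)):
    print(f"{name}: {average_frame_size(mix):.1f} bytes average, "
          f"{line_rate_fps(mix) / 1e6:.2f} Mframes/s per direction")
# IPv4: 657.1 bytes average, 18.46 Mframes/s per direction
# IPv6: 667.1 bytes average, 18.19 Mframes/s per direction
```

With this mix a 100GbE port has to handle roughly 18.5 million frames per second per direction; with 64-byte frames alone (`line_rate_fps({64: 1})`) the figure rises to about 148.8 million.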

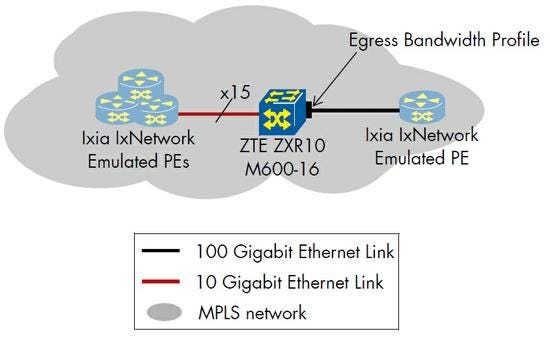

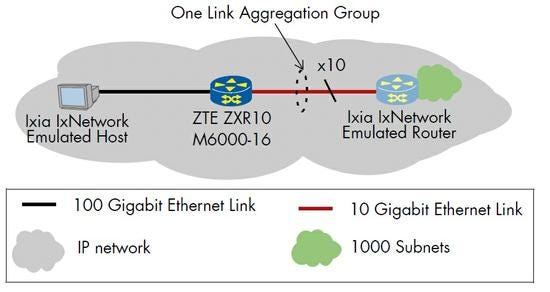

Using the test setup shown above, we sent bidirectional line-rate traffic between a set of ten 10Gigabit Ethernet links and a single 100Gigabit Ethernet link. We ran this test for 60 seconds for each frame size. Not a single frame was dropped. After the tests were executed with IPv4 traffic, ZTE reloaded the router with new firmware -- the same version number but a newer build -- and we repeated the tests with IPv6 traffic. Again, no traffic was dropped. ZTE declined to test simultaneous IPv4 and IPv6 traffic. With IMIX traffic, the tester in fact transmitted at most 99.97 percent of line rate, owing to the way the packet streams were built from our configuration settings. No packet was dropped in any test run.

EXECUTIVE SUMMARY

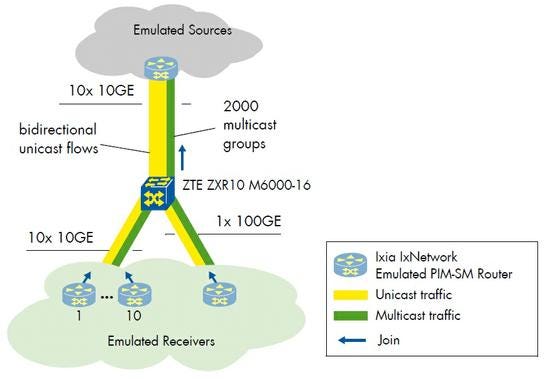

We verified that the ZXR10 M6000-16 can properly forward a realistic 70/30 mix of unicast and multicast traffic at wire speed across a 100GbE interface, at frame sizes ranging from 128 to 9,216 bytes.

After getting that baseline "feeling" for the unicast capabilities of the 100GbE line card, we moved on to the next item on our list -- multicast replication. Multicast is essential these days to both residential and business services. It is used for financial transactions in trading houses and for data distribution in military simulations, and it is the primary method of providing millions of customers with broadcast television content over IP networks.

But processing multicast traffic is more complex than forwarding unicast traffic. Here the router has to maintain state information about the receivers to which traffic should be sent, as well as a distribution tree that determines the direction of the flow. Since the router has to make multiple copies of the same incoming packet and distribute them to multiple outgoing ports, it is logical to replicate on the egress line cards rather than on the line cards on which the packets are received. Why? Simple router design economics -- if replication happens on the ingress card, the backplane capacity could be oversubscribed when a large number of egress cards have multicast receivers. So the safest approach is to replicate the traffic on the egress interface.
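The backplane argument can be made concrete with a back-of-the-envelope model. The sketch below is a simplification, not a description of the ZXR10 M6000-16 fabric: it assumes that with ingress replication one copy per receiving port leaves the ingress card, while with egress replication the ingress card sends one copy per egress card and each egress card fans the packet out to its own ports. Fabrics that replicate internally would reduce the egress-side figure further. The card and port counts in the example are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class MulticastStream:
    rate_gbps: float
    # Egress line card id -> number of ports on that card with joined receivers.
    receivers_per_card: dict = field(default_factory=dict)


def fabric_load_gbps(stream, replicate_at_ingress):
    """Bandwidth one stream places on its ingress card's fabric connection (simplified)."""
    if replicate_at_ingress:
        # Ingress card makes every copy itself: one per receiving port.
        copies = sum(stream.receivers_per_card.values())
    else:
        # Egress replication: one copy per egress card that has receivers;
        # that card then fans the packet out to its own ports.
        copies = sum(1 for ports in stream.receivers_per_card.values() if ports > 0)
    return stream.rate_gbps * copies


# Hypothetical example: a 10 Gbit/s stream with receivers on four ports of
# each of five egress cards, plus one card with no receivers.
stream = MulticastStream(10.0, {1: 4, 2: 4, 3: 4, 4: 4, 5: 4, 6: 0})
print(fabric_load_gbps(stream, replicate_at_ingress=True))   # 200.0
print(fabric_load_gbps(stream, replicate_at_ingress=False))  # 50.0
```

In this toy case, ingress replication would need four times the fabric bandwidth for the same multicast stream, and the gap grows with the number of receiving ports per card.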

Our test plan defined a topology using Protocol Independent Multicast – Sparse Mode (PIM-SM), as many core networks do. The ZXR10 M6000-16 was equipped with one 100GbE interface and twenty 10GbE interfaces. It was configured as a Rendezvous Point (RP), which is exactly what it sounds like: traffic has to go through this device. The Ixia emulated static multicast sources behind ten of the twenty 10GbE interfaces. Behind the other ten 10GbE interfaces and the 100GbE interface, it emulated PIM-SM routers sending PIM join messages toward the RP. We emulated 2,000 multicast groups in total, 200 groups per transmit port.

We executed a multicast throughput performance test according to RFC 3918. Unless used solely for multicast applications, networks today generally carry little multicast traffic compared to unicast. We therefore chose a multicast-to-unicast ratio of 30:70 -- 30 percent of traffic on the receiving interfaces was multicast, 70 percent was unicast. The frame sizes tested (in bytes) were 128, 146, 391, 1,518 and 9,216, plus IMIX. After the joins were sent, traffic of each frame size (and the IMIX) was transmitted at 100 percent load for 60 seconds. In all cases, all frames were forwarded appropriately, and PIM-SM sessions were not disrupted. The test confirmed that the ZXR10 M6000-16 can properly forward a mix of unicast and multicast traffic at each of these frame sizes.
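To illustrate the receiver state an RP has to hold in this test, here is a deliberately simplified model of (*,G) join state: for each group, a set of outgoing interfaces built from received joins, with replication to every joined interface except the one the packet arrived on. It ignores timers, RPF checks, register messages and the shortest-path-tree switchover that real PIM-SM involves. The interface names and the 239.1.0.0 group range are hypothetical, and having every receiving interface join every group is an assumption made only for the example.

```python
import ipaddress


class RendezvousPointState:
    """Simplified (*,G) state: group address -> set of outgoing interfaces (OIL)."""

    def __init__(self):
        self.oil = {}

    def join(self, group, interface):
        self.oil.setdefault(group, set()).add(interface)

    def prune(self, group, interface):
        self.oil.get(group, set()).discard(interface)

    def replicate(self, group, incoming_interface):
        """Interfaces a packet for `group` is copied to (never back out the arrival port)."""
        return sorted(i for i in self.oil.get(group, ()) if i != incoming_interface)


# Hypothetical topology loosely mirroring the test: ten receiving 10GbE ports
# plus the 100GbE port, and 2,000 groups (200 per source port on ten ports).
receivers = [f"10GE-{n}" for n in range(11, 21)] + ["100GE-1"]
groups = [ipaddress.IPv4Address("239.1.0.0") + i for i in range(2000)]

rp = RendezvousPointState()
for group in groups:
    for interface in receivers:
        rp.join(group, interface)

source_port = "10GE-1"  # would source groups[0:200] in this example
print(len(rp.oil), len(rp.replicate(groups[0], source_port)))  # 2000 11
```

Even this toy version holds 22,000 group/interface entries, which hints at why multicast puts more pressure on router state than unicast forwarding does.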

EXECUTIVE SUMMARY

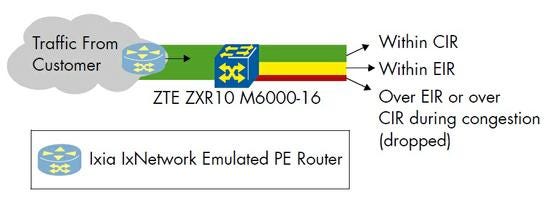

The ZTE ZXR10 M6000-16 successfully shaped and prioritized traffic of multiple bandwidth profiles in parallel based on predefined committed and excess information rates (CIR/EIR).

In order to support today's converged networks, routers must prioritize certain traffic flows over others. This ability requires the router to implement special memory for queuing frames, processors dedicated to per-packet calculations, and algorithms that can make real-time decisions about frame handling. That's certainly not a trivial task, and at 100GbE speeds the problem intensifies. In addition, such quality-of-service (QoS) tests are hindered by the fact that the IP industry has no common, precise language for configuring bandwidth profiles and packet handling. The logic of the tests, however, is solid. Service providers are not looking for constant upgrades in their network -- the 100GbE solutions going into a provider's network in 2011 should still be useful in 2016. It is no different from the days when 10GbE interfaces came to market -- for a brief moment the industry and operators believed that the bandwidth constraints had been relieved. But that euphoric feeling lasted only a year or two. Traffic prioritization is a must in today's networks, especially given the nature of future cloud and mobile applications. And with the move to the cloud (note Apple's recent iCloud announcement), the data volume traversing the pipes will keep increasing. Even with an infinite number of MP3s sitting in the cloud, each and every one of us would still like to make cheap calls using voice over IP. Well, traffic differentiation is required to accomplish just that.

Our test case focused on an egress profile within an MPLS network. LSPs were established in each direction between the emulated PEs -- from the fifteen 10Gigabit Ethernet links to the 100Gigabit Ethernet link (thirty LSPs total). Two bandwidth profiles were defined, one color-aware and one color-blind. Each customer had a Committed Information Rate (CIR) defined. One customer was capped at this committed rate, while the other also had an Excess Information Rate (EIR) -- an "if it's available" bandwidth allocation that may be used when other customers are not using it. ZTE declined the steps verifying the Committed Burst Size (CBS) and Excess Burst Size (EBS) parameters.

First, we checked that no traffic was dropped when each customer sent 95 percent of its CIR. Then we increased traffic for the customer with an EIR of 0 and verified that traffic above the CIR was dropped. As a third step, we sent traffic above the CIR for the customer that did have an EIR, verifying that the coloring remained intact and no traffic was dropped. Finally, we verified that under congestion each customer received its respective CIR, and only traffic within the EIR range was dropped. The policies indeed worked effectively, and ZTE's ZXR10 M6000-16 passed this test.
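The CIR/EIR behavior tested here can be sketched as a two-rate, three-color token-bucket marker in the style of the MEF 10 bandwidth profile algorithm, with the coupling flag off. This is not ZTE's implementation, and the class and parameter names are hypothetical. Green frames fall within the CIR; yellow frames fall within the EIR and are the first to be dropped under congestion; red frames are discarded. In color-aware mode, a frame that arrives already marked yellow can never be promoted to green.

```python
class BandwidthProfile:
    """Sketch of an MEF 10-style two-rate, three-color marker (coupling flag = 0)."""

    def __init__(self, cir_bps, cbs_bytes, eir_bps, ebs_bytes, color_aware=False):
        self.cir_bps, self.cbs = cir_bps, cbs_bytes
        self.eir_bps, self.ebs = eir_bps, ebs_bytes
        self.color_aware = color_aware
        # Both token buckets start full; bucket levels are in bytes.
        self.bc, self.be = float(cbs_bytes), float(ebs_bytes)
        self.last_t = 0.0

    def _refill(self, t):
        elapsed = t - self.last_t
        self.last_t = t
        # Rates are in bit/s, so divide by 8 to add bytes to the buckets.
        self.bc = min(self.cbs, self.bc + self.cir_bps * elapsed / 8)
        self.be = min(self.ebs, self.be + self.eir_bps * elapsed / 8)

    def mark(self, t, length_bytes, in_color="green"):
        """Return 'green', 'yellow' or 'red' for a frame arriving at time t (seconds)."""
        self._refill(t)
        blind = not self.color_aware
        if (blind or in_color == "green") and length_bytes <= self.bc:
            self.bc -= length_bytes
            return "green"
        if (blind or in_color != "red") and length_bytes <= self.be:
            self.be -= length_bytes
            return "yellow"
        return "red"


# Customer capped at its CIR (EIR = 0): traffic above the CIR is red and dropped.
cir_only = BandwidthProfile(cir_bps=8000, cbs_bytes=1000, eir_bps=0, ebs_bytes=0)
print([cir_only.mark(0.0, 1000) for _ in range(2)])  # ['green', 'red']

# Customer with an EIR: the excess is forwarded as yellow until the EIR bucket empties.
with_eir = BandwidthProfile(cir_bps=8000, cbs_bytes=1000, eir_bps=8000, ebs_bytes=1000)
print([with_eir.mark(0.0, 1000) for _ in range(3)])  # ['green', 'yellow', 'red']
```

The four test steps map onto this model directly: traffic below the CIR stays green, excess traffic from the EIR-less customer turns red, excess from the other customer turns yellow, and under congestion it is the yellow traffic that a scheduler drops first.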

EXECUTIVE SUMMARY

ZTE’s ZXR10 M6000-16 successfully forwarded traffic to 500,000 MAC addresses learned across 500 emulated customers with VPLS services.

Running baseline tests as discussed in the previous three sections is a starting point. After evaluating the results and satisfying ourselves that the 100GbE interface ZTE brought to the test is a capable solution for IPv4 and IPv6 forwarding, as well as for multicast and traffic prioritization, we were ready to move on to testing revenue-generating services, such as Layer 2 Ethernet services at scale.

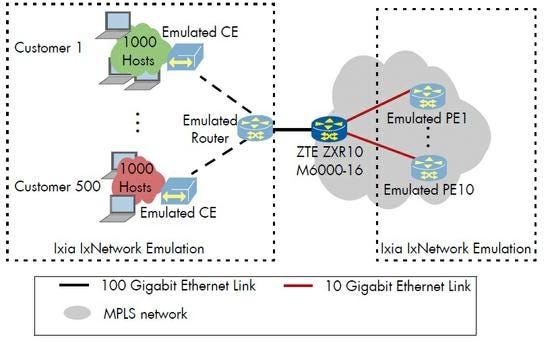

These days, 100GbE interfaces are a significant investment even for large operators. Not only are the interfaces dear, but the CFPs are priced at bleeding-edge amounts -- until one bleeds. One common way to recoup this investment quickly is to terminate a large number of business services on the system working as an MPLS Provider Edge (PE) router facing the aggregation network. Today, the service interfaces are typically 10Gigabit Ethernet ports connected downstream. We therefore challenged ZTE to support a high, but realistic, number of Ethernet multipoint VPN services using Virtual Private LAN Services (VPLS). The services were to be terminated on the 10Gigabit Ethernet interfaces of the ZXR10 M6000-16, while the 100Gigabit Ethernet interface would accumulate the MPLS traffic toward the core network.

VPLS is no spring chicken; the set of protocols was standardized by the IETF more than four years ago. The idea behind VPLS is rather elegant -- turn a wide-area service provider network into a huge family of virtual Ethernet switches providing isolated VPN services. The routers at the edge of the network must learn customer MAC addresses from their directly connected customer-facing ports and connect to other customer ports over the network using MPLS pseudowires. Each customer is aggregated into a construct called a Virtual Switch Instance (VSI), which separates one customer's MAC table from another's. When a frame with an unknown destination enters one of the routers participating in a VPLS, it is flooded to the other VPLS members, just as in any Ethernet switch.

We set up a realistic network consisting of 500 customers, all attached to the ZXR10 M6000-16 over the 100GbE interface, with our tester both generating customer traffic (each customer had 1,000 active users, or MAC addresses) and emulating the other Provider Edge routers participating in our 500 VPLS domains. Before sending end-to-end traffic, we first transmitted "learning" traffic from all source MAC addresses so that the router could store them in memory. We then sent bidirectional end-to-end traffic at various loads -- 10, 50, 90 and 98 percent of line rate -- for two minutes each. No test run experienced packet loss.
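The VSI behavior described in this section (per-customer MAC learning, forwarding of known unicast, flooding of unknown destinations) fits in a few lines. This is an illustrative model, not ZTE's implementation, and the port and pseudowire names are hypothetical. It also applies split horizon, the VPLS rule (RFC 4762) that a frame received from a pseudowire is never forwarded onto another pseudowire, which a full mesh of pseudowires relies on to avoid loops. The MAC addresses come from the documentation range 00:00:5e:00:53:xx.

```python
class VirtualSwitchInstance:
    """One customer's VPLS instance: a private MAC table and flooding domain."""

    def __init__(self, attachment_circuits, pseudowires):
        self.pseudowires = set(pseudowires)
        self.ports = set(attachment_circuits) | self.pseudowires
        self.mac_table = {}  # MAC address -> port it was learned on

    def receive(self, in_port, src_mac, dst_mac):
        """Learn the source MAC, then return the ports the frame is sent out of."""
        self.mac_table[src_mac] = in_port
        if dst_mac in self.mac_table:
            targets = [self.mac_table[dst_mac]]       # known unicast
        else:
            targets = sorted(self.ports - {in_port})  # unknown: flood
        if in_port in self.pseudowires:
            # Split horizon: never forward from one pseudowire onto another.
            targets = [p for p in targets if p not in self.pseudowires]
        return [p for p in targets if p != in_port]


# Hypothetical customer: one local attachment circuit, two pseudowires to remote PEs.
vsi = VirtualSwitchInstance(["ac-1"], ["pw-pe2", "pw-pe3"])
print(vsi.receive("ac-1", "00:00:5e:00:53:01", "00:00:5e:00:53:02"))    # ['pw-pe2', 'pw-pe3']
print(vsi.receive("pw-pe2", "00:00:5e:00:53:02", "00:00:5e:00:53:01"))  # ['ac-1']
print(vsi.receive("ac-1", "00:00:5e:00:53:01", "00:00:5e:00:53:02"))    # ['pw-pe2']
```

Because each customer gets its own `VirtualSwitchInstance`, the same MAC address can appear in two customers' tables without conflict. The "learning" traffic sent before the measurements plays the role of the first two calls above: once all 1,000 source MACs per customer (500,000 across the 500 VSIs) are in the tables, end-to-end traffic goes to a single port instead of being flooded.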

EXECUTIVE SUMMARY

Scaled IP VPN services are a reality for the ZTE ZXR10 M6000-16, which successfully forwarded traffic at close to wire speed to 500,000 IP routes across 500 emulated IPv4-based enterprise customers.

Side by side with Carrier Ethernet services, a significant percentage of operators' business services revenue still comes from managed IP VPNs. Known in the standards as BGP/MPLS IP VPNs, this service is aware of the customer's IP network. Many enterprises take advantage of outsourcing network provisioning and maintenance, as well as add-on services, such as those related to multicast and video, that can sometimes be added more easily than with Ethernet-based services. We wanted to help operators answer this question: Is ZTE's 100GbE-enabled router ready to be used in large-scale IP VPN deployments?

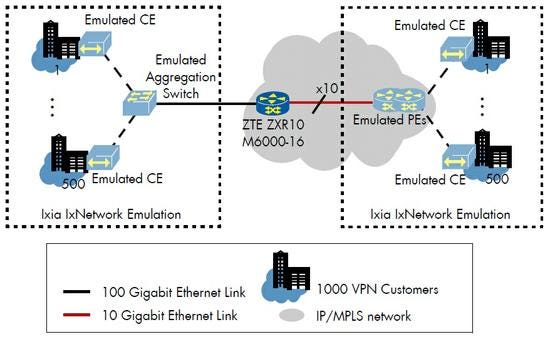

This test case follows a similar concept to the VPLS test above. We emulated 500 business customers, each with 1,000 IPv4 customer routes to be learned via eBGP on the customer interface. All customers had one emulated site connected "locally" on the ZXR10 M6000-16's 100GbE interface; half of them also had sites on one group of five emulated PEs, and the other half had sites on another group of five. The local customer interfaces were differentiated on the 100GbE interface by VLAN tag. ZTE declined to include IPv6 customers, so all customers were IPv4-based. After all routes were learned, we ran traffic at different loads -- 90 percent and 98 percent -- for two minutes each. No packets were dropped.
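A BGP/MPLS IP VPN PE keeps a separate routing table (VRF) per customer, so overlapping customer address space does not collide. The sketch below shows the lookup path implied by this setup: the VLAN tag on the 100GbE interface selects the customer's VRF, and a longest-prefix match inside that VRF picks the next hop. The linear scan is for clarity only (production routers typically use tries or TCAM), and the VLAN numbers, prefixes and PE names are hypothetical.

```python
import ipaddress


class ProviderEdge:
    """Per-customer VRFs selected by the VLAN tag of the customer attachment."""

    def __init__(self):
        self.vlan_to_vrf = {}
        self.vrfs = {}  # VRF name -> list of (prefix, next hop)

    def add_customer(self, vlan, vrf):
        self.vlan_to_vrf[vlan] = vrf
        self.vrfs.setdefault(vrf, [])

    def add_route(self, vrf, prefix, next_hop):
        self.vrfs[vrf].append((ipaddress.ip_network(prefix), next_hop))

    def lookup(self, vlan, destination):
        """Longest-prefix match inside the VRF bound to the ingress VLAN."""
        address = ipaddress.ip_address(destination)
        routes = self.vrfs[self.vlan_to_vrf[vlan]]
        matches = [(net, nh) for net, nh in routes if address in net]
        if not matches:
            return None
        return max(matches, key=lambda route: route[0].prefixlen)[1]


pe = ProviderEdge()
pe.add_customer(101, "customer-a")
pe.add_customer(102, "customer-b")
# Both customers use overlapping 10.0.0.0/8 space; the VRFs keep them apart.
pe.add_route("customer-a", "10.0.0.0/8", "PE-1")
pe.add_route("customer-a", "10.1.0.0/16", "PE-2")
pe.add_route("customer-b", "10.0.0.0/8", "PE-6")
print(pe.lookup(101, "10.1.2.3"), pe.lookup(102, "10.1.2.3"))  # PE-2 PE-6
```

In the test, the router held 500 such VRFs with 1,000 learned routes each, 500,000 entries in total.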

EXECUTIVE SUMMARY

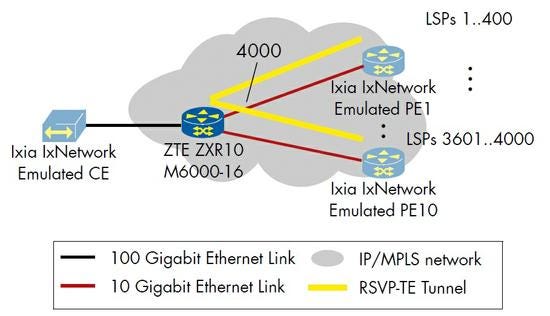

The ZXR10 M6000-16’s 100GbE interface scaled up to 4,000 RSVP-TE tunnels.

ZTE sees its customers requiring 100Gigabit Ethernet today in their core and backbone networks, but it is also aware of their future need for 100GbE in aggregation and metro networks. Wherever operators choose to deploy ZTE's ZXR10 M6000-16, in most or even all cases they will use the Resource Reservation Protocol with its Traffic Engineering extensions (RSVP-TE). Because it allows operators to build MPLS traffic engineering tunnels (LSPs) across their network and to dedicate bandwidth resources to them, RSVP-TE is crucial.

The goal of this test was to verify, independently of a full service configuration, that RSVP-TE tunnels could scale on the ZXR10 M6000-16. To establish 4,000 RSVP-TE tunnels between the ZXR10 M6000-16 and the ten Ixia-emulated PEs, we set up 200 per emulated PE, per direction. Since no services were configured over these tunnels, we allowed the vendor to map traffic to the LSPs as it saw fit. ZTE mapped the tunnels to IP subnets, which we included in our traffic flows. We then executed two-minute tests for a series of frame sizes -- 395 bytes, 1,522 bytes and IMIX -- at 98 percent of line rate each. Once again, all traffic was forwarded without any issues. While RSVP-TE is a base feature, this test establishes confidence that it can indeed scale on the ZXR10 M6000-16.
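The tunnel count follows directly from the setup: 10 emulated PEs × 200 tunnels × 2 directions = 4,000. The sketch below reproduces that arithmetic and assigns each tunnel its own destination subnet, loosely mirroring ZTE's choice to map tunnels to IP subnets. The article does not say how ZTE's mapping was configured; the 172.16.0.0/12 address plan and the one-/24-per-tunnel rule are purely hypothetical.

```python
import ipaddress
from itertools import product

EMULATED_PES = 10
TUNNELS_PER_PE_PER_DIRECTION = 200
DIRECTIONS = ("to-pe", "from-pe")  # router -> emulated PE, emulated PE -> router


def build_tunnel_map(address_plan="172.16.0.0/12"):
    """Assign one hypothetical /24 destination subnet to every RSVP-TE tunnel."""
    subnets = ipaddress.ip_network(address_plan).subnets(new_prefix=24)
    return {
        (pe, direction, index): next(subnets)
        for pe, index, direction in product(
            range(1, EMULATED_PES + 1), range(TUNNELS_PER_PE_PER_DIRECTION), DIRECTIONS
        )
    }


tunnels = build_tunnel_map()
print(len(tunnels))              # 4000 = 10 PEs x 200 tunnels x 2 directions
print(tunnels[(1, "to-pe", 0)])  # 172.16.0.0/24
```

A /12 holds 4,096 /24 subnets, just enough for this plan; traffic to a given subnet then exercises exactly one LSP.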

EXECUTIVE SUMMARY

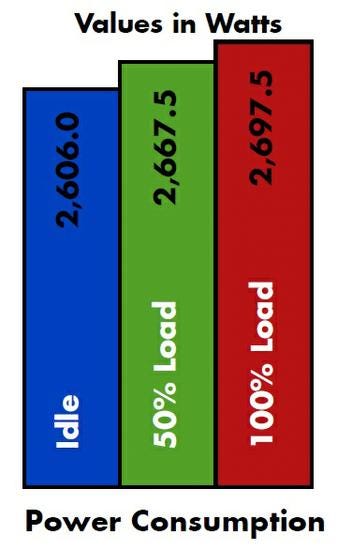

ZTE's 22-slot core router, loaded with four three-port 10GE line cards and one 100GE line card, forwarded 196 Gbit/s of traffic while consuming 2,697.5 watts of power, yielding 13.76 watts per Gbit/s.

Operators have a dual goal when it comes to energy efficiency: lowering power bills and satisfying environmental requirements set either by the government or by their "green"-crazy marketing department. ZTE had some interesting insight here: in its experience, its service provider customers focus particularly on the latter -- lowering carbon emissions. Given ZTE's home market, this is not much of a surprise. The most stringent requirements are typically set for access devices, but core routers are still part of the story. At a more practical level, ZTE also sees operators looking to expand without having to build new facilities -- setting requirements for the space and power available at their current facilities.

Power measurements are not easily comparable; the context must be considered. The ZXR10 M6000-16 is a 22-slot router occupying almost an entire 19-inch rack. It is not your grandma's router. In our test, four three-port 10Gigabit Ethernet line cards were used to provide ten ports in total (two ports on the last card were not needed). All ten interfaces were aggregated to the 100Gigabit Ethernet interface on a fifth line card. We ran traffic bidirectionally for 20 minutes at 50 percent of line rate, then for 20 minutes at 100 percent of line rate, and then left the device idle (the Ixia transmitted no traffic) for 20 minutes. Just before the end of each 20-minute period we measured the power drawn by the router. At 0 percent, 50 percent and 100 percent load the router drew 2,606 watts, 2,667.5 watts and 2,697.5 watts respectively. This is in line with our expectations for a normal core router and should provide a good baseline for operators looking to install this router.
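As a quick check of the headline efficiency figure, and of how little of the power draw depends on load, the arithmetic below uses only the three power readings and the 196 Gbit/s throughput reported in this section. The constant and function names are ours.

```python
# Power drawn by the router, in watts, keyed by offered load (percent of line rate).
POWER_W = {0: 2606.0, 50: 2667.5, 100: 2697.5}
# Traffic forwarded at 100 percent load, as reported in this section's summary.
THROUGHPUT_GBPS = 196.0


def watts_per_gbps(power_w, throughput_gbps):
    """Efficiency metric: watts consumed per Gbit/s forwarded."""
    return power_w / throughput_gbps


def traffic_dependent_share(readings):
    """Fraction of full-load power attributable to traffic (full load minus idle)."""
    return (readings[100] - readings[0]) / readings[100]


print(f"{watts_per_gbps(POWER_W[100], THROUGHPUT_GBPS):.2f} W per Gbit/s")  # 13.76 W per Gbit/s
print(f"{traffic_dependent_share(POWER_W) * 100:.1f}% of full-load power")  # 3.4% of full-load power
```

In other words, the idle chassis already draws roughly 97 percent of its full-load power, so the watts-per-Gbit/s figure is most favorable when the router runs at high utilization.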

EXECUTIVE SUMMARY

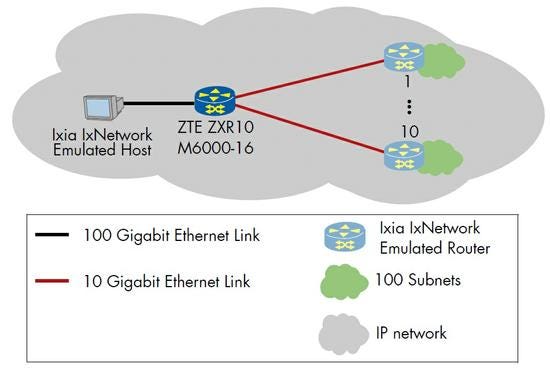

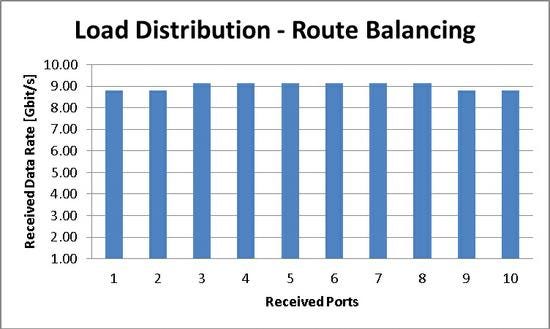

The ZXR10 M6000-16 transmitted 180 Gigabits per second of bidirectional IPv4 traffic relatively evenly from a single 100Gigabit Ethernet link across ten 10Gigabit Ethernet links.

We asked ZTE how its system differs from the competition. One aspect is that it uses ZTE's own chipsets, developed for the purpose. Another is what ZTE calls its unified platform -- the fact that ZXR10 M6000-16 line cards can be used in a ZXR10 T8000 and vice versa. In our tests, we gave vendors an opportunity to suggest additional test cases where they believe they excel, which brings us to another area in which ZTE sees itself as strong -- traffic distribution. ZTE's tests of choice relate to the even distribution of traffic load across physical links; the first is IP-based.

For its IP route balancing test, ZTE took advantage of our previous setups, which used ten 10Gigabit Ethernet links and one 100Gigabit Ethernet link connected to the Ixia. On the 100GbE interface, Ixia's IxNetwork application emulated a single IP host. On each of the ten 10GbE interfaces, IxNetwork emulated a router running IS-IS and advertising 100 routes. All emulated routers advertised the same 100 routes. The challenge for the ZXR10 M6000-16 was to distribute the traffic coming from the IP host and destined to the 100 emulated routes equally across the ten links.

To verify this, we configured a bidirectional traffic stream between the emulated IP host on the 100GbE interface and the 100 emulated routes behind each of the ten emulated routers. First and foremost, we observed no packet loss; all frames were forwarded appropriately. Additionally, ZTE wanted to demonstrate even load sharing across the links. To quantify this, we divided the total number of frames sent by the number of ports to obtain the average load per port. ZTE expected that no single 10Gigabit Ethernet link load would deviate more than 5 percent from the average. We measured a maximum deviation of 4.01 percent. The graph below shows the load per 10Gigabit Ethernet port.
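Routers typically keep every packet of a flow on one path by hashing header fields and taking the result modulo the number of equal-cost links. The sketch below illustrates that idea together with the deviation metric used in this test, each link's load compared with the per-link average. The article does not describe ZTE's hash function; SHA-256 over a hypothetical (source, destination, source port) key is only a stand-in, and the flows are invented for illustration.

```python
import hashlib
from collections import Counter


def ecmp_link(flow, n_links):
    """Map a flow (tuple of header fields) to one of n equal-cost links."""
    key = "|".join(str(part) for part in flow).encode()
    digest = hashlib.sha256(key).digest()
    return int.from_bytes(digest[:4], "big") % n_links


def max_deviation_percent(per_link_loads):
    """Largest deviation of any link's load from the per-link average, in percent."""
    average = sum(per_link_loads) / len(per_link_loads)
    return max(abs(load - average) * 100 / average for load in per_link_loads)


N_LINKS = 10
# Hypothetical flows: one host, 100 destinations, and varying source ports.
flows = [("198.51.100.1", f"203.0.113.{i % 100}", 1024 + i) for i in range(10_000)]
per_link = Counter(ecmp_link(flow, N_LINKS) for flow in flows)
loads = [per_link[link] for link in range(N_LINKS)]
print(loads, f"max deviation {max_deviation_percent(loads):.2f}%")
print(max_deviation_percent([100, 104, 96, 100]))  # 4.0
```

Hash-based balancing only evens out when there are many flows; with few large flows, individual links can end up well above the average, which is why the deviation metric is a meaningful pass criterion.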

EXECUTIVE SUMMARY

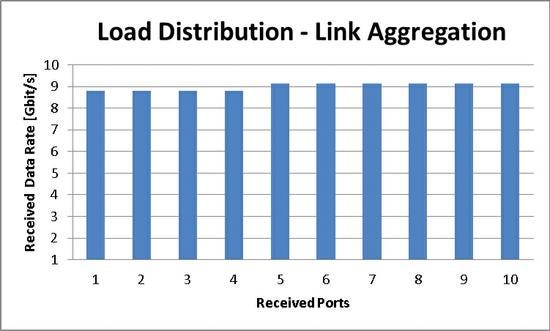

The ZXR10 M6000-16 transmitted 180 Gbit/s of bidirectional Ethernet services traffic relatively evenly from a 100Gigabit Ethernet link to a Link Aggregation Group (LAG) of ten 10Gigabit Ethernet links.

ZTE's second additional test of choice verified its implementation of traffic distribution at the Ethernet layer. We transmitted traffic from a 100GbE interface to ten 10GbE interfaces bundled into an Ethernet Link Aggregation Group (LAG) and measured how evenly the ZXR10 M6000-16 distributed the load.


All in all, ZTE took to the challenges of the test quite well. Unicast and multicast performance results were indeed impressive. Readers may have noticed that latency values were not included; this was deliberate. We opted not to present this test as a bake-off in which readers try to decide which solution is objectively "the best" -- particularly given the current race to provide "ultra low latency" services. Such services are absolutely required, especially for cloud services, but were not the focus of this test. Our scalability tests (VPLS, Layer 3 VPN, RSVP-TE) were designed to verify that latency did not increase significantly as services were scaled, and this was indeed the case. Finally, we also saw that services not only scaled, but that the solution could differentiate traffic appropriately and even provide a variety of load distribution mechanisms. We hope this article provides insight and helps set appropriate expectations for those looking into ZTE's 100GbE-enabled solution. As a closing statement, designed to annoy those struggling to afford and implement 100Gigabit Ethernet, we are looking forward to tests of Terabit Ethernet!

— Carsten Rossenhövel is Managing Director of the European Advanced Networking Test Center AG (EANTC), an independent test lab in Berlin. EANTC offers vendor-neutral network test services for manufacturers, service providers, governments and large enterprises. Carsten heads EANTC's manufacturer testing and certification group and interoperability test events. He has over 20 years of experience in data networks and testing.

Jonathan Morin, EANTC, managed the project, worked with vendors and co-authored the article.
