The second in our series of 100GbE tests puts the Alcatel-Lucent 7750 SR-12 through its paces

July 14, 2011

Welcome to the continuation of our open test program aimed at validating the performance, service scalability and power efficiency of 100Gigabit Ethernet (100GbE) router interfaces. As more and more vendors are beginning to deploy the first generation of 100GbE interfaces for their flagship routers, European Advanced Networking Test Center AG (EANTC) and Light Reading have set up a public test program aimed to provide operators with an unbiased view of these new interfaces.

As was the case in our most recent test, we took an up-to-date mix of carrier network requirements and created a test plan that we intended to apply to all vendors participating in the program. Vendors also had the opportunity to define two additional tests of their own, subject to our approval.

With the help of testing vendor Ixia, we then performed the agreed-upon tests at each vendor’s lab. The results will be presented in a series of articles right here on Light Reading -- one for each vendor who goes through the tests.

This report will recap the results for Alcatel-Lucent (NYSE: ALU), our latest test participant. Alcatel-Lucent's 7750 SR-12 has been around for some time and the company showed that adding 100GbE interfaces doesn't change much about the features operators are already familiar with. Alcatel-Lucent sometimes has their own way of doing things, but as you'll read they ably took on the challenge of this test.

The contents of this report are as follows:

Test Contents

Alcatel-Lucent's Solution

MPLS Traffic Differentiation

Services Scalability: VPLS

Transport Scalability: RSVP-TE

Energy Efficiency

Scaled Services

400Gbit/s Link Aggregation

Conclusion

Testing Info

We worked closely with Ixia Communications to ensure that our scalability goals were reached while meeting our No. 1 goal of testing services realistically. It's also worth reminding those who don’t use these tools day to day that long gone are the days of so-called "packet blasting." Thankfully, IxNetwork -- the software we used for all of the tests -- allowed us to emulate more realistic scenarios. MPLS services testing requires intelligence in the tester to represent a virtual network with hundreds of nodes. IxNetwork calculates the resulting traffic to be sent to the device under test across the directly attached interfaces -- including signaling and routing protocol data as well as emulated customer data frames. This way, a reasonably complex environment with hundreds of VPNs and tens of thousands of subscribers can be emulated in a representative and reproducible way. For the 100Gigabit Ethernet test interface we used Ixia's K2 HSE100GETSP1. The software used was IxNetwork version 5.70.120.14, IxOS version 6.00.700.3.

About EANTC

The European Advanced Networking Test Center (EANTC) is an independent test lab founded in 1991 and based in Berlin, conducting vendor-neutral proof of concept and acceptance tests for service providers, governments and large enterprises. EANTC has been testing MPLS routers, measuring performance and interoperability, for nearly a decade at the request of industry publications and service providers.

EANTC’s role in this program was to define the test topics in detail, communicate with the vendors, coordinate with the test equipment vendor (Ixia) and conduct the tests at the vendors’ locations. EANTC engineered, then extensively documented the results. Vendors participating in the campaign had to submit their products to a rigorous test in a controlled environment contractually defined. For this independent test, EANTC exclusively reported to Light Reading. The vendors participating in the test did not review the individual reports before their release. Each vendor had a right to veto publication of the test results as a whole, but not to veto individual test cases.

-- Carsten Rossenhövel is Managing Director of the European Advanced Networking Test Center AG (EANTC), an independent test lab in Berlin. EANTC offers vendor-neutral network test services for manufacturers, service providers, governments and large enterprises. Carsten heads EANTC's manufacturer testing and certification group and interoperability test events. He has over 20 years of experience in data networks and testing.

Jonathan Morin, EANTC, managed the project, worked with vendors and co-authored the article.

Initially, we designed our test program with core routers in mind. Since 2003 the Alcatel-Lucent 7750 Service Router (SR) has become the flagship product of the IP division and is no stranger to the industry, focusing primarily on edge deployments. Alcatel-Lucent chose the 7750 SR-12 for the tests, citing its versatile functionality, scalability and deployment models, which included everything from video contribution networks to Internet exchange points. As the name implies, the “service router” focuses on services with heavy edge functionality, but the router had no problems fulfilling the core-network roles designed into some of the tests. Alcatel-Lucent also mentioned that they do see deployments of the product in core, aggregation, and edge network areas. A recent LRTV interview adds some details and gives a nice overview of Alcatel-Lucent's capabilities.

The 100Gbit/s architecture is of course just as much a question of the interface line card as it is of the chassis, if not more so. For the tests, Alcatel-Lucent used their 100Gig-capable version of their Integrated Media Module (IMM) cards -- one with a single 100GbE interface and one with 12 10GbE interfaces (oversubscribed). They explained that the same network processor is used in both the 100GbE IMM card and the 10GbE IMM card and both are 100Gbit/s ready. The code version running on the router throughout the tests was TiMOS-C-8.0.R9. We were told that operators who already have a 7750 SR-12 in their network can upgrade to 100GbE by only purchasing the 100GbE interface card and potentially also a new power supply. The optics used in this test were of the LR10 type, but Alcatel-Lucent explained this was based on availability and that they support LR4 CFPs in their 100GbE interface card as well.

EXECUTIVE SUMMARY

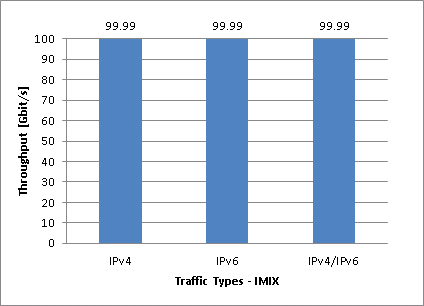

Alcatel-Lucent demonstrated performance indicative of line rate for IMIX traffic of IPv4, IPv6 and a mix of both.

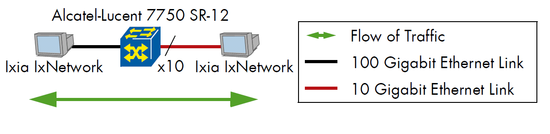

At EANTC we treat forwarding performance as the first item on the list: it is a way to get to know the router a bit better and a good baseline, especially for new high-speed interfaces. A legacy yet established Request for Comments document (RFC 2544) describes a methodology for measuring the forwarding performance of the network interface hardware. Our intention was to use the RFC methodology, which cycles through a series of frame sizes, for this evaluative purpose and use an Internet Mix (IMIX) traffic definition for the remainder of the tests. Alcatel-Lucent stated that their common practice with customer tests is to use only IMIX traffic for such performance tests. We therefore ran the unicast forwarding test with our IMIX definition, which consisted of the following weights: nine parts of 64-byte frames, two parts of 576-byte frames, four parts of 1,400-byte frames and three parts of 1,500-byte frames. For IPv6, we used nine parts of 84-byte frames instead of 64-byte frames.
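To make the IMIX weights above more tangible, here is a minimal Python sketch that derives the average frame size and an approximate frame rate on a 100GbE link. It assumes the "parts" are weights by frame count, that the stated sizes are full Ethernet frame sizes, and that each frame costs an extra 20 bytes on the wire (preamble plus inter-frame gap). The function names are ours, not part of any test tool.

```python
# IMIX weights as given in the article: (frame size in bytes, parts)
IMIX_IPV4 = [(64, 9), (576, 2), (1400, 4), (1500, 3)]
IMIX_IPV6 = [(84, 9), (576, 2), (1400, 4), (1500, 3)]

# Assumption: 8 bytes of preamble/SFD plus a 12-byte inter-frame gap per frame.
WIRE_OVERHEAD_BYTES = 20


def average_frame_size(mix):
    """Average frame size in bytes, weighting each size by its parts."""
    total_parts = sum(parts for _, parts in mix)
    return sum(size * parts for size, parts in mix) / total_parts


def frames_per_second(mix, link_bps, load=1.0):
    """Approximate frame rate for the mix at a given fraction of line rate."""
    avg_wire_bits = (average_frame_size(mix) + WIRE_OVERHEAD_BYTES) * 8
    return load * link_bps / avg_wire_bits


if __name__ == "__main__":
    for name, mix in (("IPv4", IMIX_IPV4), ("IPv6", IMIX_IPV6)):
        avg = average_frame_size(mix)
        fps = frames_per_second(mix, 100e9, load=0.9999)
        print(f"{name}: average frame {avg:.1f} bytes, about {fps / 1e6:.2f} Mframes/s")
```

Under these assumptions the IPv4 mix averages roughly 657 bytes per frame, which is why an IMIX run stresses the forwarding engine far less than a run of 64-byte frames only.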

As the most straightforward setup among our tests, ten 10GbE interfaces and one 100GbE interface were connected directly to the Ixia (Nasdaq: XXIA) traffic generator. Bidirectional IP traffic flows were statically configured in “pairs” between the 10GbE ports and the 100GbE port, without routing protocols enabled. With the recent pandemonium over IPv4 addresses running out we know operators are looking more closely at IPv6 as a base requirement -- and for good reason. We repeated the test three times: once with IPv4 traffic, once with only IPv6 at wire-speed, and once with a mix of 70 percent IPv4 and 30 percent IPv6 packets. For all three iterations, traffic was run at 99.99 percent of line rate for 60 seconds.

No packet drops were experienced for any of the test runs. For Alcatel-Lucent this was a good first move -- the router and the 100GbE interface functioned as expected under a traffic load that was close to realistic. We were now comfortable that testing could progress with more advanced scenarios.

EXECUTIVE SUMMARY

The replication of 2,000 IPv4 multicast groups 100 times, when also mixed with unicast traffic, was verified, while also reaching line rate with almost no issues.

Having verified that the 100GbE interfaces can perform in the absolutely fundamental and essential role of forwarding unicast packets, we moved on to evaluating the performance of the cards when replicating multicast traffic. These days multicast is almost synonymous with IPTV as an efficient distribution method for TV over IP, but multicast is also used in financial trading, video production and the research world.

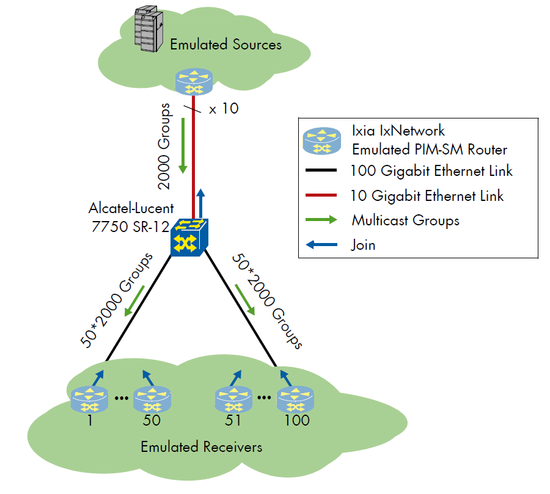

With some exceptions, such as dedicated video contribution networks, multicast still accounts for a small percentage of traffic. We therefore planned a mix of 70 percent unicast and 30 percent multicast traffic to be emulated -- a more-than-modest proportion of multicast traffic, but still realistic. It was difficult to say no when Alcatel-Lucent wanted to then up the ante to an opposite ratio -- 70 percent multicast traffic and only 30 percent unicast traffic. They explained how the setup is similar to some broadcast network deployments of Alcatel-Lucent’s customers.

Multicast testing often starts with topology discussions -- where should the receivers be positioned and where should the source be? For this test we wanted to make sure that the replication engine installed on the 100GbE card got a proper workout. We designed the test topology in such a way that our multicast sources would be attached to the 10GbE interfaces of the device under test and the receivers, in this case PIM neighbors, would be attached to 100GbE and 10GbE receiving interfaces. Our test plan called for a single 100GbE interface plus ten 10GbE interfaces to act as receivers, as we kept in mind that more than one 100GbE interface might be hard to come by. Alcatel-Lucent had some extras available, and replaced the ten 10GbE receiver interfaces with a second 100GbE. We did not see a reason to object.

Per our test plan, 2,000 unique multicast groups were sourced across the 10GbE interfaces (200 on each). Alcatel-Lucent opted to raise the requirements of the original plan further by emulating not a single downstream router on each of the two physical 100GbE interfaces but 50 (our plan had called for 11 in total: one per 10GbE interface, plus one on the 100GbE). Each of the 50 downstream PIM-SM-enabled emulated routers on each 100GbE interface transmitted PIM-SM “joins” to the router under test.

After sending all joins we ran traffic for two minutes and measured the loss. We found that the first burst of traffic -- appearing from the Ixia tester to be under a second -- was initially lost. The total loss for the entire run was a fraction of a percentage point (under 0.247%) and the Ixia tester indicated that this loss was only at the initial burst -- as soon as traffic started the loss number showed immediately and did not seem to increase (counters of transit traffic are not exactly precise until the test stops). Alcatel-Lucent explained that this was expected, and not an issue in deployments as multicast “joins” are a bit more staggered. Unicast traffic experienced no loss for either direction.
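As a rough sanity check on the numbers above, the following Python sketch works out the scale of the multicast setup and an upper bound on how long the initial loss could have lasted. It assumes every emulated downstream router joined all 2,000 groups, that traffic was sent at a constant rate for the full two minutes, and that all loss fell in one contiguous window at the start of the run; none of these is stated as such in the test description.

```python
GROUPS = 2_000                 # unique multicast groups sourced
ROUTERS_PER_100GBE = 50        # emulated PIM-SM routers per receiving interface
RECEIVER_PORTS = 2             # two 100GbE receiving interfaces
RUN_SECONDS = 120              # two-minute traffic run
LOSS_FRACTION_UPPER = 0.00247  # "under 0.247%" of multicast traffic lost

downstream_routers = ROUTERS_PER_100GBE * RECEIVER_PORTS

# Assumption: each emulated router joined every group.
group_router_pairs = GROUPS * downstream_routers

# Assumption: constant send rate and a single loss window at the start.
loss_window_upper_s = LOSS_FRACTION_UPPER * RUN_SECONDS

if __name__ == "__main__":
    print(f"Emulated downstream routers: {downstream_routers}")
    print(f"Group/router join pairs: {group_router_pairs:,}")
    print(f"Loss window upper bound: {loss_window_upper_s:.3f} s")
```

Under those assumptions the loss window comes out at roughly 0.3 seconds, which is consistent with the tester's indication that the initial burst lasted under a second.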

EXECUTIVE SUMMARY

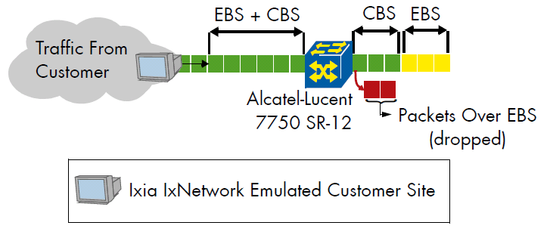

Traffic differentiation from the 100GbE card was verified, ensuring that the appropriate traffic, out of multiple customers with different bandwidth profiles each with multiple classes, is recolored past its committed rate and burst limits, and dropped past the peak rate and burst limits.

Traffic prioritization is the third building block for healthy router operation. These days, when networks simultaneously support multiple service types ranging from residential and business customers to mobile backhaul, IPTV and VoIP, being able to prioritize certain business or mission critical packets over other packets when network resources are starved is essential to business continuity. Alcatel-Lucent confirmed that their service provider customers have indeed learned from history that the bandwidth surplus from 100GbE interfaces will not last forever and that they are planning for differentiated services from the get-go.

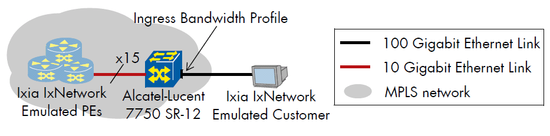

The task is far from trivial. The router must first be able to distinguish between the packets, then assign them to different queues and then process them according to their importance. The work does not actually begin with the router; it starts with the network designer, who has a variety of tools at his or her disposal to plan for these potential congestion scenarios. Schedulers, policers, shapers and prioritization algorithms are all involved for the purpose of treating the most important packets (read: most revenue generating) with diligence and speed, while allowing other packets to be slightly delayed or even dropped. Our task was to design a realistic test scenario that would enable us to verify that this complex functionality really works. And just for added bonus points, complexity and endless hours of conference calls, every vendor tends to understand traffic prioritization exactly as its own router implements it. This was no easy test.

The test focused mostly on rate and burst policing, while still including a step involving congestion. Alcatel-Lucent explained that they consider policing at an egress profile a corner case, and explained that conducting the test from an ingress profile would be more representative of their deployments. Since our original setup placed the 100GbE at the egress where we planned for congestion and policing, we had to now swap the setup to make the 100GbE the ingress port to ensure that the 100GbE card remained as the focus of the test.

We went on to verify that the 7750 SR-12 properly enforced the Committed Information Rate (CIR) and Excess Information Rate (EIR) for multiple customers in parallel. Basically, CIR is what a customer is promised no matter what, and EIR is bandwidth they may use if the network is not congested and can afford it. First, we checked that no traffic was dropped when each customer sent 95 percent of their CIR limit. Then, we increased traffic for the customer who had an EIR of 0, and verified that traffic above the CIR was dropped. As a third step, we sent traffic above the CIR for the customer who did have an EIR, verifying that coloring remained intact and no traffic was dropped. Since ingress policing was tested, the congestion step originally planned no longer made sense. Finally, we verified the burst policing -- Committed Burst Size (CBS) and Excess Burst Size (EBS) -- ensuring that the network would never see bursts past a given size, and would recolor bursts that approached but did not reach that size. The policies worked effectively indeed. The diagram also shows what this looked like for additional clarity.
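The behavior verified above (forward within CIR/CBS, recolor within EIR/EBS, drop beyond that) can be illustrated with a generic two-bucket marker. The Python sketch below is a simplified, color-blind model written for this article; the class name, parameters and the example profile are hypothetical, and it is not a description of how the 7750 SR-12 implements policing.

```python
from dataclasses import dataclass, field


@dataclass
class TwoRateMarker:
    """Simplified two-bucket policer: green within CIR/CBS, yellow within EIR/EBS, else red."""
    cir_bps: float    # committed information rate, bits per second
    cbs_bytes: float  # committed burst size, bytes
    eir_bps: float    # excess information rate, bits per second
    ebs_bytes: float  # excess burst size, bytes
    c_tokens: float = field(init=False)
    e_tokens: float = field(init=False)
    last_time: float = field(init=False, default=0.0)

    def __post_init__(self):
        # Both buckets start full.
        self.c_tokens = self.cbs_bytes
        self.e_tokens = self.ebs_bytes

    def mark(self, now: float, frame_bytes: int) -> str:
        """Return 'green', 'yellow' (recolored) or 'red' (dropped) for one frame."""
        elapsed = now - self.last_time
        self.last_time = now
        # Refill each bucket at its rate, capped at its burst size.
        self.c_tokens = min(self.cbs_bytes, self.c_tokens + elapsed * self.cir_bps / 8)
        self.e_tokens = min(self.ebs_bytes, self.e_tokens + elapsed * self.eir_bps / 8)
        if self.c_tokens >= frame_bytes:
            self.c_tokens -= frame_bytes
            return "green"
        if self.e_tokens >= frame_bytes:
            self.e_tokens -= frame_bytes
            return "yellow"
        return "red"


if __name__ == "__main__":
    # Hypothetical profile: 8 kbit/s CIR and EIR, one 1,500-byte frame of burst each.
    marker = TwoRateMarker(cir_bps=8_000, cbs_bytes=1_500, eir_bps=8_000, ebs_bytes=1_500)
    for t in (0.0, 0.0, 0.0, 1.5):
        print(t, marker.mark(t, 1_500))
```

A customer with an EIR of 0 corresponds to an empty excess bucket in this model, so anything beyond the committed bucket goes straight to "red", matching the second step of the test.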

EXECUTIVE SUMMARY

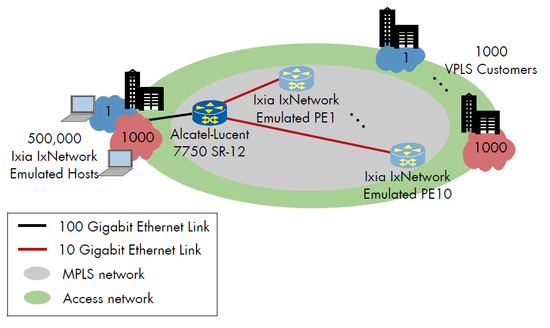

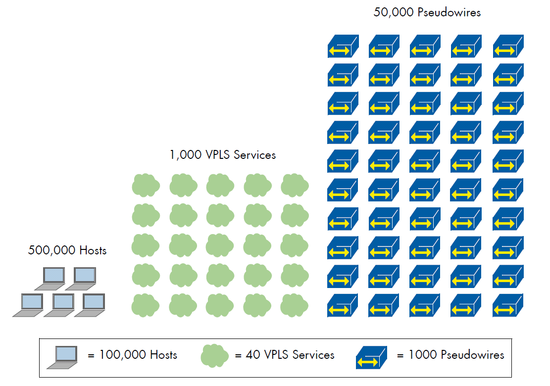

Carrier-grade Layer 2 services from the 7750 SR-12’s new 100Gigabit Ethernet interface were verified including a total of 500,000 emulated hosts from 1,000 VPLS customers.

Based on the results we collected in our unicast, multicast and traffic prioritization tests, we had a good feel for the performance of the router. But simple traffic forwarding, replication and prioritization functions are not sufficient in today’s networks. Networks must generate revenues and revenues are generally generated by selling services. We therefore turned our attention to the services one could offer using the 100GbE interfaces. Virtual Private LAN Service (VPLS) is one such service. It enables service providers to offer business customers a service that turns the wide area network into a LAN.

An organization with multiple locations purchasing such a service would, theoretically, be sitting on the same local area network even when the offices are geographically dispersed. Such services are also standardized by the Metro Ethernet Forum under the terms E-LAN and EVP-LAN depending on the level of transparency the operator provides. The router supporting VPLS services must also be able to learn MAC addresses both from locally and remotely attached customers for a given service. It also must establish Ethernet pseudowires to all other routers on which the customer is attached and maintain a virtual service instance (VSI) for each customer -- a heavy workload.

Our test plan aimed to mimic a realistic yet scaled scenario, calling for 1,000 VPLS services, each with 500 emulated hosts (500 MAC addresses, or 500,000 total). This also meant a considerable number of pseudowires, given that the remote sites were spread across 10 peer PE routers emulated by the Ixia. To keep things realistic, each customer had sites attached to five of the ten remote PEs, but for the sake of the test obviously all had locally attached sites (sites attached to the 7750 SR-12). This meant a total of 50,000 pseudowires were maintained simultaneously. In each test, “learning” traffic was first sent -- populating the MAC tables so the router knew where all MAC addresses were sourced. We then ran several two-minute tests, each running IMIX traffic at a different percentage of line rate, including 10 percent, 50 percent, 90 percent and 98 percent, to ensure issue-free stability at various loads. In all tests the router did not drop any frames, proving that scaled and realistic VPLS services are indeed not an issue for the 7750 SR-12.

EXECUTIVE SUMMARY

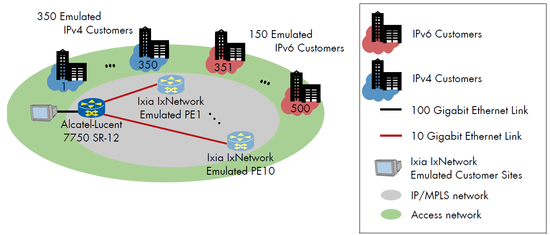

Layer 3 Virtual Private Networks were verified with 350 IPv4-based customers and 150 IPv6-based customers each with 1,000 internal subnets to be learned and forwarded to by means of Alcatel-Lucent’s 100Gigabit Ethernet interface.

The second type of service we set out to verify was the popularly deployed BGP/MPLS virtual private network (VPN) service. Also known as a Layer 3 VPN (L3VPN), such services have been operated by many service providers since the late '90s. As opposed to VPLS the customers are attached to the network using IP connectivity, but the principle of operation is similar to VPLS -- multiple customer sites are attached to the service provider network that is in turn responsible for connecting all these sites to each other.

The challenge here for the router is somewhat different from VPLS though. The router must maintain connectivity with the customer by running a routing protocol (in our case BGP). On top of this control plane connectivity, the router must distribute the learned routes from the customer to the other routers participating in the VPN. And last, but most certainly not least, the router must forward packets to their correct destinations. These requirements translate to a rather large control and forwarding plane exercise: Each Virtual Routing and Forwarding Instance (VRF) “belongs” to a customer and must learn and install a number of routes in hardware. Based on the number of customers attached to the router, and the number of routes they advertise, the total number of routes could bring a router to its knees.

Our test was designed in such a way that we always maintained a realistic number of routes (1,000 per customer), VRFs (500 total customers), and a realistic number of sites to which a customer was attached (six total -- the local site on the 7750 SR-12 and five remote sites connected to emulated PEs). We also required that 30 percent of the VPNs were for IPv6 networks. After all routes were advertised, we sent IMIX traffic for two minutes at 90 percent of load and two minutes at 98 percent of load, making sure to hit all routes for all customers in both directions. Again, no surprises here, all packets were forwarded appropriately, proving that the Layer 3 VPN services familiar to the 7750 SR-12 are the same services it knows just as well when connected with a 100Gigabit Ethernet interface.
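The scale figures above can be cross-checked with a few lines of arithmetic. This Python sketch assumes the 1,000 routes are counted once per customer VRF; the constant names are ours.

```python
TOTAL_VRFS = 500           # one VRF per customer
IPV6_PERCENT = 30          # share of VPNs carrying IPv6
ROUTES_PER_VRF = 1_000     # assumption: counted once per customer VRF
SITES_PER_CUSTOMER = 6     # one local site plus five remote sites

ipv6_vrfs = TOTAL_VRFS * IPV6_PERCENT // 100
ipv4_vrfs = TOTAL_VRFS - ipv6_vrfs
total_routes = TOTAL_VRFS * ROUTES_PER_VRF
remote_sites_per_customer = SITES_PER_CUSTOMER - 1

if __name__ == "__main__":
    print(f"IPv4 VRFs: {ipv4_vrfs}, IPv6 VRFs: {ipv6_vrfs}")
    print(f"IPv4 routes: {ipv4_vrfs * ROUTES_PER_VRF:,}")
    print(f"IPv6 routes: {ipv6_vrfs * ROUTES_PER_VRF:,}")
    print(f"Total VPN routes: {total_routes:,}")
```

The 350/150 split matches the executive summary, and under the stated assumption the router had to hold on the order of half a million VPN routes while forwarding at up to 98 percent of load.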

EXECUTIVE SUMMARY

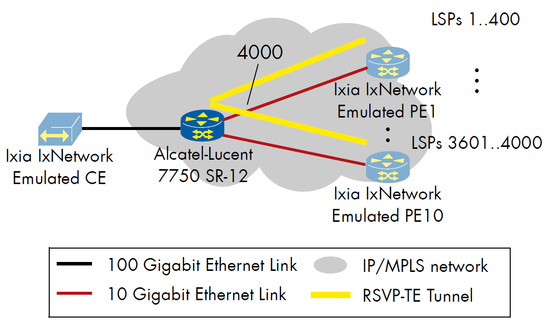

The base function for building MPLS services -- Label Switched Paths (LSPs) -- scales indeed, at least up to 4,000.

A 100GbE-enabled router could find itself in the core of the network. In such a deployment scenario the router would have to support Multiprotocol Label Switching (MPLS) operations such as label swapping and learning routes from its neighbors. Trivial task you say? Hardly. In the core of the network the router would need to support a large number of Label Switched Paths (LSPs) and to perform its label swapping operations at interface speed. The next test set out to verify the ability of the router to support a large number of LSPs. This is a basic configuration, but we know that most operators like to test specific tools individually -- eliminating variables -- before bringing on a full-scale test with all services enabled.

We established 4,000 MPLS “tunnels” (LSPs) between the 7750 SR-12 and the ten Ixia emulated PEs (200 for each, in each direction). It is not a completely foreign concept for operators to use raw RSVP-TE tunnels for traffic engineering without services associated to them, but it is indeed rare. We therefore did not make strict requirements for how traffic was mapped to LSPs. Alcatel-Lucent mapped them to IP subnets, which we included in our traffic flows. We sent these flows at a total of 98 percent of line rate bidirectionally, again for two minutes. Wrapping up the set of three scalability tests, we once again found no major issues in the 7750 SR-12’s ability to scale these well-known tools.

EXECUTIVE SUMMARY

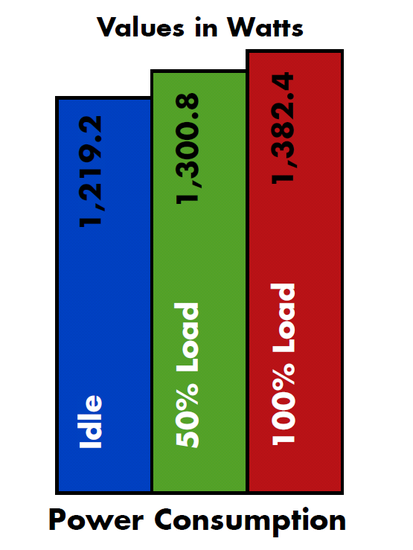

Running the 7750 SR-12 at 100 percent load, running it at 50 percent load and letting it idle, equipped with three fans and two control plane cards, yielded power consumption values of 1382.4, 1300.8 and 1219.2 Watts respectively.

Green telecom, generally, means less power, and less power means a less expensive power bill. Standards bodies defining testing methodologies have specifically intended to keep the story this simple, aiming to answer the question: What is the cost (where cost is measured in terms of power) per bit transferred? Power concerns are currently more prevalent on data center network nodes, given the complexity and high set of requirements that operators have on their core network elements, but this is starting to change. Power is still power, and cost is still cost. If a vendor can make a bottom-line difference to an operator, it is a selling point.

When we originally wrote the test plan in early 2010, there were two standards being developed -- one by the Alliance for Telecommunications Industry Solutions (ATIS) and one in parallel by the Energy Consumption Rating (ECR) Initiative. We therefore stuck with a straightforward methodology using ideas from each. The vendor could first choose a configuration from one of the previous tests -- in this case we used the VPLS test setup. Using that setup, we simply repeated the test at 50 percent load, at 100 percent load, then measured afterward with no traffic (0 percent load), each for twenty minutes.

It's easy to start comparing such numbers amongst equipment, but it's more accurate, albeit more involved, to keep in mind that different vendors build completely different architectures, each with different strengths. Apples to oranges to kiwi. Alcatel-Lucent’s 7750 SR-12 is geared toward the service edge, and was equipped with two CPM3s (the card used for the control plane and as a switching fabric), one PEM (power supply), and three cooling fans.
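For readers who want to put the measured values into perspective, here is a small Python sketch using the three power readings from the executive summary. The load-dependent share and idle share follow directly from those readings. The watts-per-Gbit/s helper needs a throughput figure, which this report does not state for the VPLS setup, so the value used in the example call is purely a placeholder.

```python
# Measured power draw in watts, keyed by traffic load in percent.
POWER_WATTS = {100: 1382.4, 50: 1300.8, 0: 1219.2}

idle_watts = POWER_WATTS[0]
full_watts = POWER_WATTS[100]

# Power that actually varies with traffic load.
load_dependent_watts = full_watts - idle_watts

# Fraction of full-load power drawn even when no traffic flows.
idle_share = idle_watts / full_watts


def watts_per_gbps(power_watts, throughput_gbps):
    """Power cost per Gbit/s; throughput must be supplied by the reader."""
    return power_watts / throughput_gbps


if __name__ == "__main__":
    print(f"Load-dependent power: {load_dependent_watts:.1f} W")
    print(f"Idle share of full-load power: {idle_share:.1%}")
    # Placeholder throughput of 100 Gbit/s, not a figure from the test.
    print(f"W per Gbit/s at 100 Gbit/s: {watts_per_gbps(full_watts, 100.0):.2f}")
```

The readings show that most of the power is drawn regardless of load, which is one reason comparisons across architectures need care.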

EXECUTIVE SUMMARY

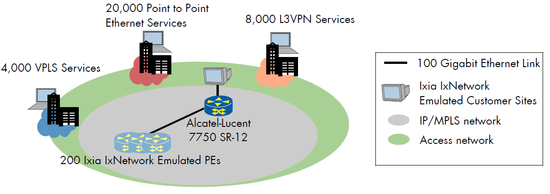

The 7750 SR-12 was verified to support 32,000 customer services configured in parallel, while Alcatel-Lucent demonstrated the hardware resources required.

Up until now all tests in this report have been defined by EANTC. One of our goals was to have a repeatable set of standard tests. Another one of our goals was to provide the vendor with a platform to display their added value. Where would they choose to be differentiated? This is the first of two such tests.

According to Alcatel-Lucent, their solution differentiates from others on the market through a high level of integration on the chipset, the product's versatile applicability across the network, and the ability to integrate IP-based and optical Dense Wavelength-Division Multiplexing (DWDM) network areas. Alcatel-Lucent explained that its main goal with this test was to use as many internal chipset resources as possible, and show that services remained intact -- a bit of an artificial white box perspective to some extent.

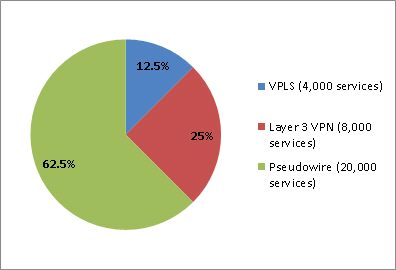

Let's break things down one step at a time. There were two main pieces -- configuring and sending traffic for 32,000 services of different types; and, at the same time, differentiating traffic based on class of service. The sheer number of services had us asking questions, but Alcatel-Lucent explained that they have deployment examples of such high service requirements including one case where 40,000 VPLS instances were required. The physical topology consisted of two 100Gigabit Ethernet links connected between the Ixia gear and the 7750 SR-12 -- one emulating a customer-facing link, and the other the network-facing link. All services on the customer side were differentiated using “Q-in-Q.” The tester generated traffic with two VLAN tags, each with an Ethertype of 0x8100. Of the 32,000 services, 8,000 were Layer 3 BGP VPNs, 4,000 were VPLS and 20,000 were point-to-point Ethernet pseudowires.

We ran traffic for five straight hours without losing a single packet. We configured the tester to send a proportional amount of traffic per service type according to the service weights: 25 Gbit/s of Layer 3 VPN traffic, 62.5 Gbit/s of Ethernet pseudowire traffic and 12.5 Gbit/s of VPLS traffic -- all for a total of 100 Gbit/s. The Ixia traffic generator was configured to send, within every customer flow, all eight possible PCP values. The end result was that the 100GbE link supported a total of 32,000 different services, and all eight possible classes of service.
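The per-type traffic figures follow from splitting the link in proportion to the number of services of each type. A minimal Python sketch of that arithmetic, with a helper name of our own choosing and assuming an even split across all services:

```python
# Service counts from the test setup.
SERVICES = {
    "Layer 3 VPN": 8_000,
    "VPLS": 4_000,
    "Ethernet pseudowire": 20_000,
}
LINK_GBPS = 100.0


def split_by_service_count(services, link_gbps):
    """Share the link among service types in proportion to their counts."""
    total = sum(services.values())
    return {name: link_gbps * count / total for name, count in services.items()}


shares = split_by_service_count(SERVICES, LINK_GBPS)

# Assumption: traffic is spread evenly, so every service gets the same rate.
per_service_mbps = LINK_GBPS * 1_000 / sum(SERVICES.values())

if __name__ == "__main__":
    for name, gbps in shares.items():
        print(f"{name}: {gbps} Gbit/s")
    print(f"Per service: {per_service_mbps} Mbit/s")
```

With an even split, each of the 32,000 services carries only a few Mbit/s, which is worth keeping in mind when reading the policer limits discussed next.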

The Alcatel-Lucent 7750 SR-12 was, of course, configured to support all 32,000 services. In addition, Alcatel-Lucent engineers configured policers on the system (matching each of our PCP classes) and applied the configuration to each customer. According to Alcatel-Lucent each customer had a dedicated queue in which its traffic was treated. A second set of policers then spread the eight classes across two arbiters, ensuring that no two classes exceeded 2 Gbit/s, and the top/last level policy ensured that no single service (customer) exceeded 10 Gbit/s.

Alcatel-Lucent's engineers then sat us down and showed us, using the CLI, the resource utilization on the system. We noted that on the card used in the test many resources were in use, while on another card, which was idling, many more resources were available. We could not, however, confirm that the policies were being enforced, since no single class of service or customer was oversubscribed and the limits reported above were never reached. What we could verify was that counters were constantly increasing for each policer per class, for each arbiter, for each service policy, for each of the 32,000 customers, showing resource numbers on the CLI that equated to:

Alcatel-Lucent explained how they wanted to show that the resources are dynamically available -- ready to be reallocated to the services that need them. In any case, this was quite some scale indeed.

EXECUTIVE SUMMARY

Scaled up to a 400Gigabit Link Aggregation Group running at line rate without packet loss. Measured out-of-service time for both recovery and restoration -- each at approximately eight milliseconds.

Even those not familiar with the technology, which has existed for longer than MPLS, could probably understand the point of a Link Aggregation Group (LAG) -- aggregating multiple links into a single logical group. Why one would do this is the next question, since a LAG can be used both to aggregate bandwidth and to provide link redundancy. The former was the main reason that Alcatel-Lucent chose to make this the second of its two vendor-suggested tests. At first we were a bit appalled at what seemed to be a flexing of muscle. Alcatel-Lucent then explained that it has learned (and, we hope, so have others) that a new interface type with plenty of bandwidth to satisfy our current needs will not satisfy them forever. It's true: some operators made this mistake when Gigabit Ethernet was introduced, and some again when they upgraded to 10Gigabit Ethernet. Data centers and Internet exchange points today are filled with groups of aggregated 10Gigabit Ethernet links, sometimes up to the maximum aggregation of 16 links -- already approaching a 200Gigabit pipe. It may not be long before operators have to aggregate 100Gigabit Ethernet links.

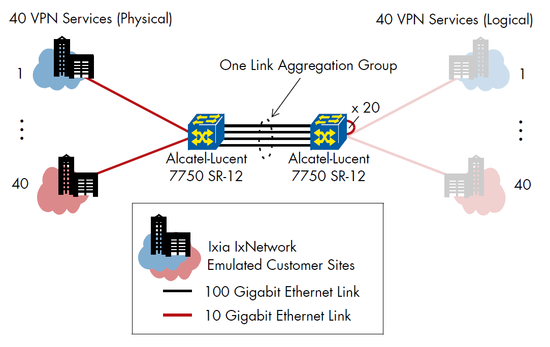

Alcatel-Lucent’s goal was to show that they are already prepared to provide 400Gigabit capacity on a single logical link. Such a test was initially a logistics and hardware question. The solution included four 100GbE links connecting two 7750 SR-12 chassis, all configured in a single LAG. For simplicity, Alcatel-Lucent explained, LACP (Link Aggregation Control Protocol), a control protocol that provides information about remote link status for links within the LAG, was not configured. The edge was built using 10Gigabit Ethernet links. On one 7750 SR-12 chassis, 40 of them were connected directly to the Ixia, while on the other 7750 SR-12 chassis, 40 were connected to each other. Wary of topologies involving loopback cables and snaking, we were relieved to find a realistic configuration from Alcatel-Lucent, with 40 unique Layer 3 VPN services configured on each chassis. On the “loopback” side, the links formed 20 pairs, with each link having its partner configured as the IP next hop. Since IP connectivity should indeed be separated within an L3VPN, it was no issue that IP networks overlapped. In fact, this is a feature.
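The capacity arithmetic behind this topology can be sketched in Python. The `LinkGroup` class and the port numbering used for the loopback pairs are hypothetical and exist only for illustration; the report does not say which ports were cabled together.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinkGroup:
    """A set of identical Ethernet links (hypothetical helper for this sketch)."""
    count: int
    speed_gbps: int

    @property
    def capacity_gbps(self) -> int:
        """Aggregate capacity of the group in one direction."""
        return self.count * self.speed_gbps


core_lag = LinkGroup(count=4, speed_gbps=100)       # 100GbE links between the two chassis
edge_tester = LinkGroup(count=40, speed_gbps=10)    # 10GbE links to the Ixia
edge_loopback = LinkGroup(count=40, speed_gbps=10)  # 10GbE links on the far chassis

# ASSUMPTION: adjacent ports (1-2, 3-4, ...) are cabled together. The real
# cabling is not documented; any pairing of the 40 ports yields 20 pairs.
loopback_pairs = [(port, port + 1) for port in range(1, edge_loopback.count + 1, 2)]

print(f"Core LAG capacity:   {core_lag.capacity_gbps} Gbit/s per direction")
print(f"Tester-side edge:    {edge_tester.capacity_gbps} Gbit/s")
print(f"Loopback-side pairs: {len(loopback_pairs)}")
```

The point of the check is simply that the 10GbE edge on each chassis can offer exactly as much traffic as the four-link core LAG can carry.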

To show stability, we left traffic running over the weekend. On the following Monday we verified that traffic had been running for just over 63 hours. Not a single packet was lost. Then came the question (actually, it was anticipated and prepared for): how does the setup prove that the four core links indeed made up a single logical link? Now that line-rate IMIX traffic had run for over 63 hours, we disabled 25 percent of the traffic from the Ixia, bringing the load down to 300 Gbit/s in each direction. This allowed us to break a link without causing congestion -- theoretically at least. Alcatel-Lucent engineers showed us on the CLI how traffic had indeed used all four of the 100GbE links in the core, each at approximately 75 percent. We then removed a cable connecting two of the 100GbE interfaces and measured the loss. Using the traffic rate, we calculated an out-of-service time of 7.951 milliseconds. We then reinserted the cable while traffic was running, which caused no loss. Indeed, almost immediately after the cable was reinserted, the link started to be used for traffic again. This satisfied our sanity check, which showed that the interfaces were in a LAG. The test showed that 100GbE interfaces can be brought into a LAG just like the interface types we are more used to, and confirmed the speed of 400 Gbit/s in each direction (800 Gbit/s all together) that can be deployed on it.
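The link-removal check in the LAG test rests on two small calculations: how the offered load spreads over the remaining LAG members, and how an out-of-service time follows from the measured loss and the traffic rate. The Python sketch below illustrates both. It assumes an even spread across links, and the lost-frame count and frame rate are made-up values, since the report publishes only the resulting 7.951 milliseconds.

```python
def per_link_utilization(offered_gbps, active_links, link_gbps=100.0):
    """Fraction of each link's capacity in use, ASSUMING an even spread."""
    return offered_gbps / (active_links * link_gbps)


def out_of_service_ms(lost_frames, frames_per_second):
    """Out-of-service time implied by frame loss at a constant frame rate."""
    return lost_frames * 1000.0 / frames_per_second


OFFERED_GBPS = 300.0  # load per direction after disabling 25 percent of the traffic

before = per_link_utilization(OFFERED_GBPS, active_links=4)
after = per_link_utilization(OFFERED_GBPS, active_links=3)
print(f"Utilization with four links:  {before:.0%}")
print(f"Utilization with three links: {after:.0%}")

# HYPOTHETICAL inputs, chosen only to show the formula; not measured values.
example_ms = out_of_service_ms(lost_frames=50_000, frames_per_second=10_000_000)
print(f"Example out-of-service time: {example_ms} ms")
```

With three links left, the same 300 Gbit/s fills the remaining members completely, which is why the load had to be reduced before the cable was pulled.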

The results, as you have seen, were quite impressive. We were pleased that Alcatel-Lucent was able to take up the challenge of the various tests and add their own to the mix, making the test session an interesting one indeed. The scalability question was undoubtedly answered, Alcatel-Lucent successfully passed the QoS verifications under their model, and multicast services scaled with almost no issues. EANTC did not publish specific latency numbers so as to avoid presenting this test as a bake-off between "ultra low latency" services. Such services are absolutely required, especially for the cloud, but were not the focus of this test. Finally, Alcatel-Lucent gave us a peek at the internal resources of the 7750 SR-12, and showed how the device is ready for the link aggregation needs of the future. You know, just in case Terabit Ethernet isn’t any cheaper than 100 Gigabit Ethernet. Alcatel-Lucent told us they’re looking “beyond 100 Gigabit Ethernet.” We look forward to the testing.

-- Carsten Rossenhövel is Managing Director of the European Advanced Networking Test Center AG (EANTC), an independent test lab in Berlin. EANTC offers vendor-neutral network test services for manufacturers, service providers, governments and large enterprises. Carsten heads EANTC's manufacturer testing and certification group and interoperability test events. He has over 20 years of experience in data networks and testing.

Jonathan Morin, EANTC, managed the project, worked with vendors and co-authored the article.
